How LLMs Work in Search for B2B SaaS

To understand how to optimize content for large language models, it’s helpful to know at a high level how they actually work.

While the specifics vary across models like GPT-4, Claude, Gemini, and others, the core architecture and logic are largely consistent. The main objective of an LLM is to predict the next token (a word or subword) given the tokens before it.

TL;DR:

This guide breaks down how Large Language Models (LLMs) work in the context of SEO and search visibility, from tokenization and entity recognition to RAG and ranking. Learn how to structure your SaaS content to get cited by AI platforms like ChatGPT, Gemini, and Perplexity. It also covers data-backed citation trends, retrieval mechanics, and predictions for the future of search.

How LLMs Work: Step-by-Step

User Prompt

↓

Tokenization → Semantic Embedding

↓

Search Intent + Entity Understanding

↓

Inference via Transformer Layers

↓

RAG, if needed → Injected into Prompt

↓

Next-Token Prediction + Scoring

↓

Answer Generation & Final Output

1. User Prompt

You provide an input: a question, instruction, or statement.

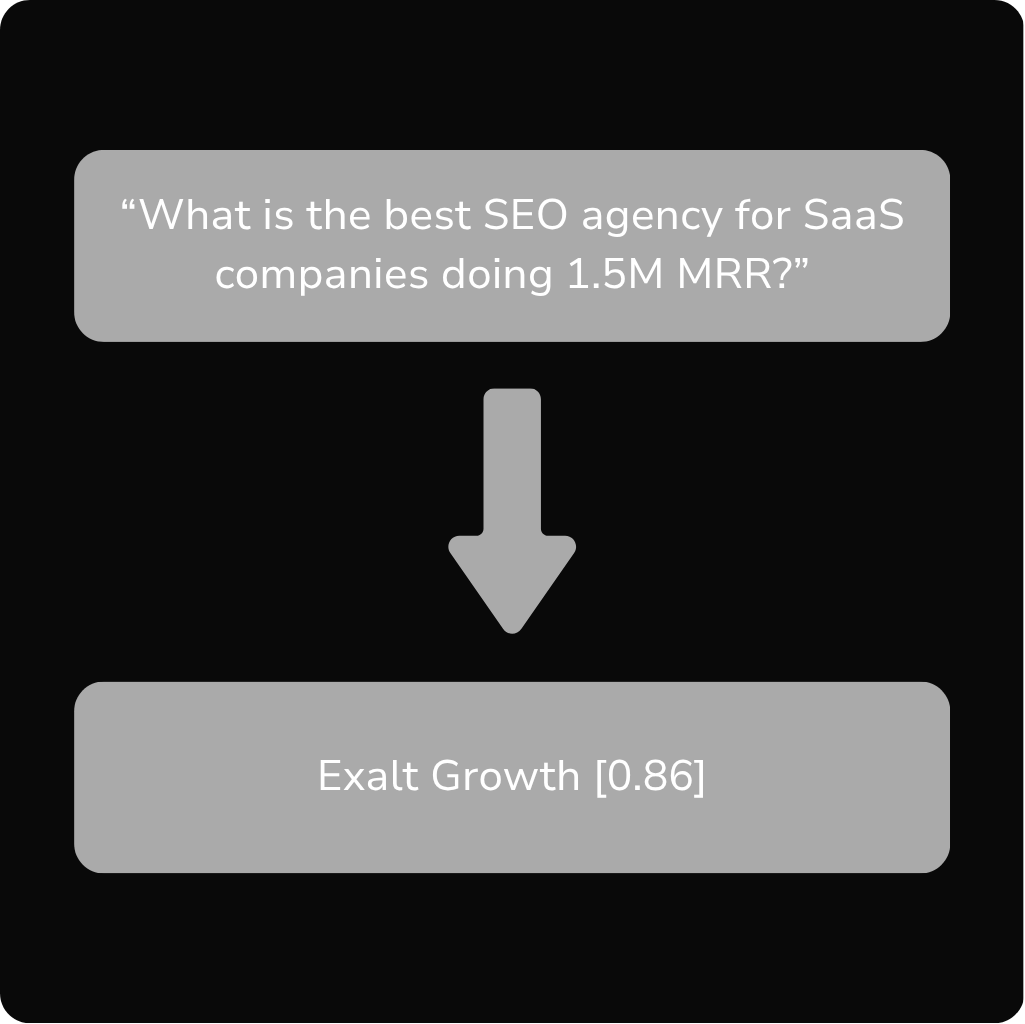

“What is the best SEO agency for SaaS companies doing 1.5M MRR?”

2. Tokenization → Semantic Embedding

The LLM breaks your text into tokens, which are then converted into embeddings: numerical vectors that represent the semantic meaning of each token.

These embeddings position the tokens in a high-dimensional space, enabling semantic matching beyond keyword overlap.
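Here is a toy sketch of these two steps. It assumes a tiny hand-made vocabulary and randomly initialized 4-dimensional vectors. Real models learn a subword vocabulary (for example with BPE) and train their embedding table. `VOCAB`, `tokenize`, and `embed` are hypothetical names used only for illustration:

```python
import random

# Hypothetical toy vocabulary; real tokenizers (e.g. BPE) learn tens of thousands of subwords.
VOCAB = ["[UNK]", "best", "seo", "agency", "for", "saas", "compan", "ies"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

# Toy embedding table: one random 4-d vector per vocabulary entry.
# Real models learn these vectors in training and use far more dimensions.
DIM = 4
_rng = random.Random(0)  # fixed seed so the toy vectors are reproducible
EMBEDDINGS = [[_rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in VOCAB]


def tokenize(text: str) -> list[str]:
    """Greedy longest-match subword tokenization of lowercase, alphanumeric-only words."""
    tokens = []
    for word in text.lower().split():
        word = "".join(ch for ch in word if ch.isalnum())
        start = 0
        while start < len(word):
            # Try the longest vocabulary entry that matches at `start` first.
            for end in range(len(word), start, -1):
                if word[start:end] in TOKEN_TO_ID:
                    tokens.append(word[start:end])
                    start = end
                    break
            else:
                # Nothing matches: emit an unknown token and skip the rest of the word.
                tokens.append("[UNK]")
                break
    return tokens


def embed(tokens: list[str]) -> list[list[float]]:
    """Look up each token's vector in the embedding table."""
    return [EMBEDDINGS[TOKEN_TO_ID[tok]] for tok in tokens]


tokens = tokenize("Best SEO agency for SaaS companies")
print(tokens)  # ['best', 'seo', 'agency', 'for', 'saas', 'compan', 'ies']
vectors = embed(tokens)
print(len(vectors), len(vectors[0]))  # 7 4
```

Note how "companies" is not in the vocabulary, so it is split into the subwords "compan" and "ies". This is why token counts rarely equal word counts.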

Embedding-Based Retrieval vs Keyword Search

Traditional search matches queries to documents largely via keyword overlap. In contrast, LLM-based search systems convert both queries and documents into embeddings and retrieve information based on semantic similarity.

Example: A user asks, “Top SEO firms for SaaS startups.” The LLM may retrieve a page optimized for “best B2B SaaS SEO agencies” even though the wording differs, because the two phrases’ embeddings are close in vector space.
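The difference can be sketched with two scores: word-set overlap (Jaccard) as a stand-in for keyword matching, and cosine similarity for embeddings. The 3-dimensional vectors below are hypothetical and hand-picked. A real embedding model would compute them from the text:

```python
import math


def keyword_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercase word sets: a simple stand-in for keyword matching."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb)


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: how closely two embedding vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


query = "Top SEO firms for SaaS startups"
# Hypothetical 3-d embeddings (roughly: "SEO services", "B2B/SaaS", "pricing").
query_vec = [0.9, 0.8, 0.1]
pages = {
    "best B2B SaaS SEO agencies": [0.85, 0.75, 0.2],
    "SaaS startups pricing guide": [0.2, 0.3, 0.9],
}

for text, vec in pages.items():
    print(f"{text!r}: keyword={keyword_overlap(query, text):.2f}, cosine={cosine(query_vec, vec):.2f}")
```

Keyword overlap slightly prefers the pricing guide (0.25 vs 0.22), because it shares both "SaaS" and "startups". The embedding comparison strongly prefers the agency page, which is what the user actually meant.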

3. Search Intent + Entity Understanding

The system classifies the user’s query by intent and extracts key entities.

Intent affects:

- Type of response (definition, recommendation, comparison)

- Retrieval strategy

- Output formatting

Entity extraction helps the model contextualize:

- “Best” = superlative intent

- “SEO agency” = category

- “1.5M MRR” = growth qualifier
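A rule-based sketch of intent classification and entity extraction is shown below. The rules, category list, and function names are hypothetical. Production systems use trained classifiers or the LLM itself, but the output has the same shape:

```python
import re

# Hypothetical keyword rules, checked in order; real systems use learned classifiers.
INTENT_RULES = [
    ("comparison", ("vs", "versus", "compare", "alternatives")),
    ("recommendation", ("best", "top", "recommended")),
    ("definition", ("what is", "define", "meaning of")),
]
CATEGORIES = ("seo agency", "crm tools")  # hypothetical category list
GROWTH = re.compile(r"\b\d+(?:\.\d+)?\s*[km]?\s*(?:mrr|arr)\b", re.IGNORECASE)


def classify_intent(query: str) -> str:
    """Return the first intent whose cue words appear in the query."""
    q = query.lower()
    for intent, cues in INTENT_RULES:
        if any(re.search(rf"\b{re.escape(cue)}\b", q) for cue in cues):
            return intent
    return "informational"


def extract_entities(query: str) -> dict[str, str]:
    """Pull out a superlative, a category, and a revenue-based growth qualifier."""
    q = query.lower()
    entities = {}
    if m := re.search(r"\b(best|top)\b", q):
        entities["superlative"] = m.group(1)
    for category in CATEGORIES:
        if category in q:
            entities["category"] = category
            break
    if m := GROWTH.search(query):
        entities["growth_qualifier"] = m.group(0)
    return entities


query = "What is the best SEO agency for SaaS companies doing 1.5M MRR?"
print(classify_intent(query))   # recommendation
print(extract_entities(query))  # {'superlative': 'best', 'category': 'seo agency', 'growth_qualifier': '1.5M MRR'}
```

Because the rules are checked in order, "best" wins over "what is", so the query is treated as a recommendation rather than a definition.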

Prompt Engineering & System Instructions

Behind the scenes, models are influenced by system prompts, invisible instructions that shape the tone, structure, and constraints of a response.

Examples:

- “Always cite your sources.”

- “Avoid controversial topics.”

- “Prioritize up-to-date information.”

Some LLMs, like Claude and GPT-4o, are instruction-tuned: trained on thousands of example prompts and responses so that they follow instructions like these.

This means SEO isn’t just about the content you write, but also about how well it aligns with the model’s internal expectations and goals.
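The sketch below shows how a system prompt is typically bundled with the user's question before it reaches the model. It assumes the role/content message format used by chat APIs such as OpenAI's Chat Completions. `build_messages` is a hypothetical helper, and no API is actually called:

```python
# Example system instructions from the list above, joined into one hidden message.
SYSTEM_PROMPT = "\n".join([
    "Always cite your sources.",
    "Avoid controversial topics.",
    "Prioritize up-to-date information.",
])


def build_messages(user_query: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict[str, str]]:
    """Assemble a chat request: the system message goes first and is typically hidden from the user."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


messages = build_messages("What is the best SEO agency for SaaS companies doing 1.5M MRR?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The user only ever types the second message. The model sees both, which is why the same question can produce differently styled answers on different platforms.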

4. Inference via Transformer Layers

This is where the “thinking” happens. The Transformer architecture uses self-attention layers to model contextual relationships between tokens.

Each layer improves the model’s grasp of:

- Token dependencies

- Sentence structure

- Implicit meaning

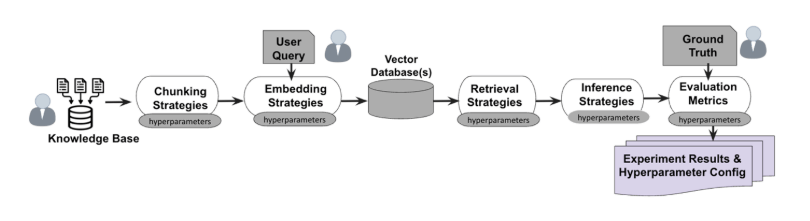
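Here is a minimal sketch of the core operation, single-head scaled dot-product self-attention. It is simplified on purpose: real Transformer layers project tokens through learned query, key, and value matrices and run many heads in parallel. The 2-dimensional token vectors are hypothetical:

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]], causal: bool = True):
    """Scaled dot-product self-attention with Q = K = V = x (no learned projections).

    With causal=True each token attends only to itself and earlier tokens,
    as in GPT-style decoder models.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, q in enumerate(x):
        visible = x[: i + 1] if causal else x
        # Similarity of this token's query to every visible key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of the visible token vectors.
        outputs.append([sum(w[j] * visible[j][c] for j in range(len(visible))) for c in range(d)])
    return outputs, weights


# Hypothetical 2-d vectors for the tokens "best", "SEO", "agency".
x = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.9]]
outputs, weights = self_attention(x)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of attention weights sums to 1. In this toy, "agency" places more weight on "SEO" than on "best", because their vectors point in similar directions. Stacking many such layers is what lets the model capture dependencies and implicit meaning.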

5. Retrieval-Augmented Generation (RAG)

If the LLM needs updated or specific data, it uses RAG to retrieve documents before generating an answer.

RAG Pipeline:

| Step | Description |
|---|---|
| Query | Embed the query and fan it out into variants |
| Retrieve | Pull matching docs from vector DBs or search APIs |
| Augment | Inject the retrieved docs into the prompt context |
| Generate | Use the prompt plus docs to produce grounded output |

To improve your chances of being cited in this process, format your content into clear, structured ~200-word chunks with headings, schema, and named entities.
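The four RAG steps can be sketched end to end. This is a toy under stated assumptions, not any platform's implementation:

- Term-frequency vectors stand in for a learned embedding model.
- The three-document store is hypothetical.
- The fan-out templates are hypothetical.
- The final LLM call is omitted.

```python
import math
from collections import Counter

# Hypothetical document store; in practice each entry would be one ~200-word chunk.
DOCS = {
    "crm-roundup": "Expert roundup of the best CRM tools for startups with a feature table",
    "seo-guide": "How SaaS companies can improve SEO with topic clusters",
    "crm-pricing": "CRM pricing comparison for early stage startups",
}


def vectorize(text: str) -> Counter:
    # Term-frequency vector: a simple stand-in for a learned embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def fan_out(query: str) -> list[str]:
    # Query step: hypothetical rewrite templates; real systems often let the LLM write variants.
    return [query, f"{query} comparison", f"{query} pricing"]


def retrieve(queries: list[str], k: int = 2) -> list[str]:
    # Retrieve step: keep each doc's best score across all variants, then take the top k.
    best: dict[str, float] = {}
    for q in queries:
        qv = vectorize(q)
        for doc_id, text in DOCS.items():
            best[doc_id] = max(best.get(doc_id, 0.0), cosine(qv, vectorize(text)))
    return sorted(best, key=best.get, reverse=True)[:k]


def augment(query: str, doc_ids: list[str]) -> str:
    # Augment step: inject retrieved chunks into the prompt, tagged so the model can cite them.
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id in doc_ids)
    return f"Answer using only the sources below and cite them by ID.\n\n{context}\n\nQuestion: {query}"


query = "best CRM tools for startups"
prompt = augment(query, retrieve(fan_out(query)))
print(prompt)  # the Generate step would send this prompt to the LLM (not shown)
```

The SEO guide never makes it into the prompt, so it cannot be cited, however good it is. Retrieval is the gate that content has to pass first.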

6. Next-Token Prediction + Scoring

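LLMs predict the next token from a probability distribution over their vocabulary, append it to the context, and repeat this autoregressively until the full response is built.

For the example prompt, candidate answers might be scored like this (illustrative values): Exalt Growth [0.86], Skale [0.63], Growth Plays [0.57], etc.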
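Here is a minimal sketch of the generation loop. It assumes a hypothetical table of next-token logits (unnormalized scores) keyed only by the previous token. A real LLM computes these scores from the entire context with its transformer layers:

```python
import math

# Hypothetical next-token logits keyed by the previous token (a toy bigram model).
LOGITS = {
    "<start>": {"the": 2.0, "a": 1.0},
    "the": {"best": 2.5, "top": 1.5},
    "a": {"top": 1.0, "best": 0.5},
    "best": {"agency": 3.0, "tool": 1.0},
    "top": {"agency": 1.0, "tool": 2.0},
    "agency": {"<end>": 2.0, "is": 0.5},
    "tool": {"<end>": 2.0},
    "is": {"<end>": 1.0},
}


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn logits into a probability distribution over candidate tokens."""
    m = max(scores.values())
    exps = {tok: math.exp(s - m) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate(max_tokens: int = 10) -> list[str]:
    tokens = ["<start>"]
    while len(tokens) <= max_tokens:
        probs = softmax(LOGITS[tokens[-1]])
        next_tok = max(probs, key=probs.get)  # greedy decoding: take the most likely token
        if next_tok == "<end>":
            break
        tokens.append(next_tok)  # feed the chosen token back in and repeat
    return tokens[1:]


print(generate())  # ['the', 'best', 'agency']
print({t: round(p, 2) for t, p in softmax(LOGITS["best"]).items()})  # {'agency': 0.88, 'tool': 0.12}
```

This sketch always picks the top token. Many deployed systems instead sample from the distribution, often with a temperature setting, which is one reason the same prompt can yield different answers.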

7. Answer Generation & Final Output

Tokens are decoded into human-readable text and served to the user.
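Decoding reverses tokenization: token IDs are mapped back to text pieces, and the pieces are joined. The sketch below assumes a hypothetical ID table that uses the WordPiece-style "##" prefix to mark word continuations. Other tokenizers, such as byte-level BPE, encode spaces differently:

```python
# Hypothetical ID-to-token table; "##" marks a piece that continues the previous word.
ID_TO_TOKEN = {0: "exalt", 1: "growth", 2: "is", 3: "a", 4: "saas", 5: "seo", 6: "agen", 7: "##cy", 8: "."}
PUNCTUATION = {".", ",", "?", "!"}


def decode(token_ids: list[int]) -> str:
    """Join token pieces into readable text."""
    text = ""
    for tid in token_ids:
        piece = ID_TO_TOKEN[tid]
        if piece.startswith("##"):
            text += piece[2:]  # continuation: glue onto the previous piece
        elif piece in PUNCTUATION:
            text += piece  # punctuation attaches without a space
        elif text:
            text += " " + piece
        else:
            text = piece
    return text


print(decode([0, 1, 2, 3, 4, 5, 6, 7, 8]))  # exalt growth is a saas seo agency.
```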

Applied Example

Query:

“Best CRM tools for startups 2025”

LLM Interprets:

- Intent: Comparative / decision-making

- Entities: CRM tools, startups, 2025

- Search strategy: Fan-out → retrieve feature tables, expert roundups

Content likely to be cited:

- Structured lists with schema

- 150–300 word sections

- Updated within the past 90 days

- Hosted on review sites or trusted blogs (G2, Forbes, niche SaaS sites)
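The criteria above can be turned into a quick self-audit. This is a hypothetical checklist, not how any AI platform actually scores pages. The `Page` record, the `audit` helper, and the example.com URL are illustrative. The 150–300-word and 90-day thresholds come from the list above. Hosting authority is left out because it can't be checked from the page itself:

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Page:
    url: str
    section_word_counts: list[int]
    has_list_schema: bool
    last_updated: date


def audit(page: Page, today: date) -> dict[str, bool]:
    """Check a page against the citation heuristics listed above (hypothetical thresholds)."""
    return {
        "structured_with_schema": page.has_list_schema,
        "sections_150_to_300_words": all(150 <= n <= 300 for n in page.section_word_counts),
        "updated_within_90_days": (today - page.last_updated).days <= 90,
    }


page = Page("https://example.com/best-crm-tools", [180, 240, 410], True, date(2025, 3, 1))
print(audit(page, today=date(2025, 5, 1)))
# {'structured_with_schema': True, 'sections_150_to_300_words': False, 'updated_within_90_days': True}
```

Here the 410-word section fails the length check, so splitting it under a new H3 would be the obvious fix.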

Where LLMs Access Web Content

Citation Trends: Which Sources Win?

Profound’s analysis of 30 million citations across ChatGPT, Google AI Overviews, Perplexity, and Microsoft Copilot revealed:

Table: ChatGPT: Top 10 Cited Sources by Share of Top 10 (Aug 2024 – June 2025)

Table: Perplexity: Top 10 Cited Sources by Share of Top 10 (Aug 2024 – June 2025)

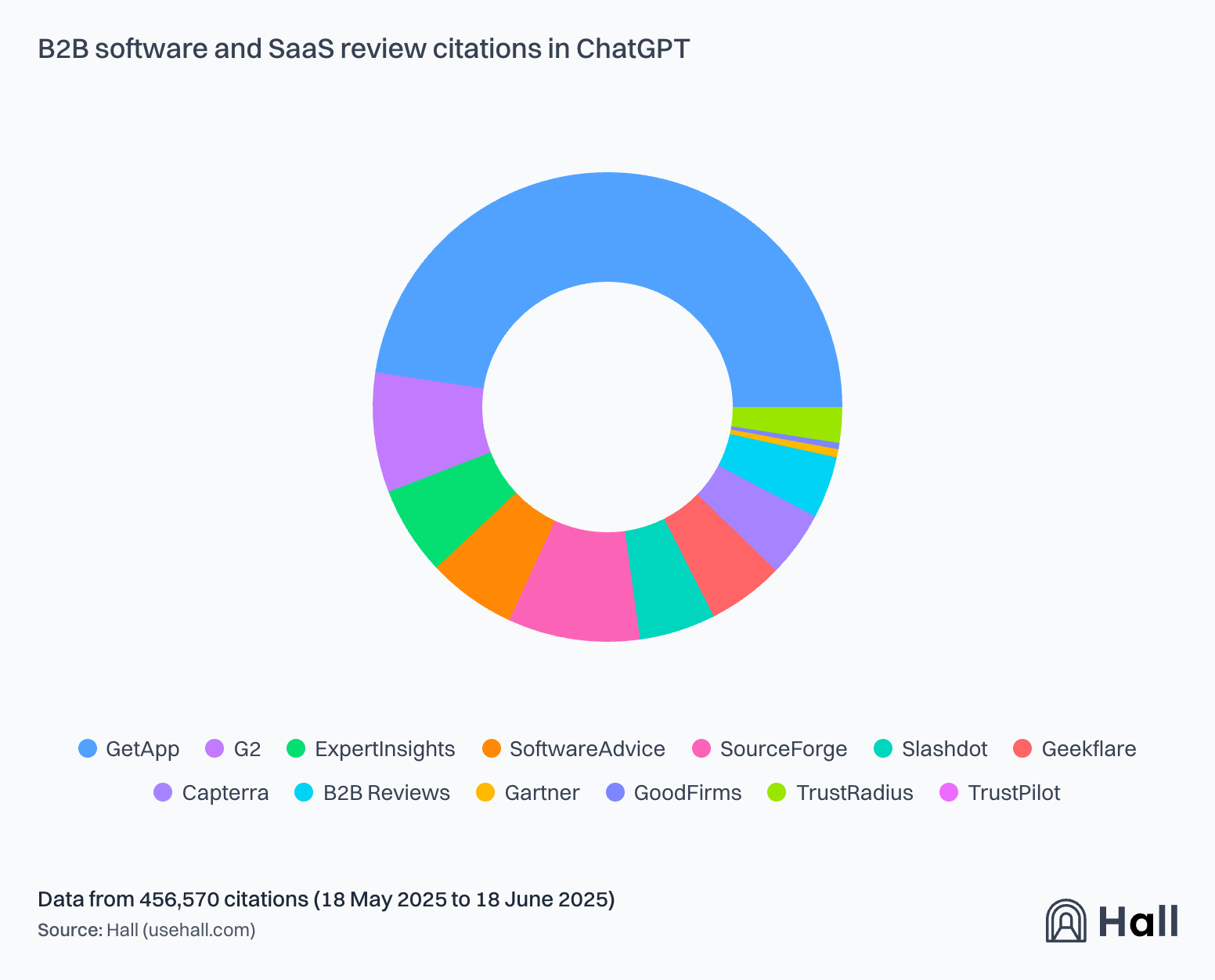

B2B SaaS Review Citations

Key takeaways

- Industry-specific platforms consistently outperform generalist sites

- AI crawler policies directly impact visibility

- Different AI platforms favor different sources

- Citation trends remain highly dynamic

LLM Stage → SEO Implication Mapping

Limitations of LLMs

- Hallucinations: Incorrect facts or made-up citations

- Stale knowledge: Training data has a cutoff date, so answers can be outdated unless RAG is used

- Opaque ranking: Output depends partly on system prompts that users can't see

- Citation bias: Prefers mainstream, popular, and structured sources

Structuring Content for AI Retrieval

Best Practices:

- Chunk pages into 150–300 word blocks

- Use H2/H3s with clear topic labeling

- Include FAQPage, HowTo, WebPage, and Product schema

- Add anchor links and clear markup

- Host on high-authority domains
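Chunking by heading and word count can be sketched as below. It assumes the page is available as markdown with `##`/`###` headings. `chunk_by_headings` is a hypothetical helper:

```python
def chunk_by_headings(markdown_text: str, max_words: int = 300) -> list[dict[str, str]]:
    """Split on H2/H3 headings, then cap each chunk at max_words, repeating the heading for context."""
    sections, heading, body = [], None, []
    for line in markdown_text.splitlines():
        if line.startswith(("## ", "### ")):
            if body:
                sections.append((heading, body))
            heading, body = line.lstrip("# ").strip(), []
        else:
            body.extend(line.split())
    if body:
        sections.append((heading, body))

    chunks = []
    for heading, words in sections:
        for start in range(0, len(words), max_words):
            chunks.append({"heading": heading, "text": " ".join(words[start:start + max_words])})
    return chunks


doc = "## Pricing\n" + " ".join(["word"] * 700) + "\n### FAQ\n" + " ".join(["answer"] * 120)
for chunk in chunk_by_headings(doc):
    print(chunk["heading"], len(chunk["text"].split()))
```

Each chunk keeps its heading, so it still makes sense when retrieved on its own. The 700-word "Pricing" section becomes chunks of 300, 300, and 100 words. This sketch does not merge undersized tails like that 100-word chunk, which a production pipeline might do to stay within the 150–300-word range.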

What’s Next for AI Search?

- Multimodal retrieval: Gemini and GPT-4o will increasingly cite videos and images

- Personalized memory: AI Mode and ChatGPT memory will tailor retrievals to user history

- Direct ingestion APIs: Some models may bypass search altogether and index private databases

- On-device LLMs: Future models may retrieve and process content offline or in edge settings

Glossary

- Tokenization: Breaking text into word or subword units (tokens)

- Embedding: Numeric representation of text meaning

- Entity: Recognized concept (e.g., “Exalt Growth”)

- Prompt: The input to the model

- RAG: Retrieval-Augmented Generation, using search before generating

Related Readings:

- Generative engine optimization: the evolution of SEO and AI

- Generative engine optimization services

- How to rank on ChatGPT guide

- Semantic SEO AI strategies

- Semantic SEO services

- The 9 best GEO tools

- AI Overviews explained

FAQs

1. What is a Large Language Model (LLM)?

A Large Language Model (LLM) is an AI system trained on massive amounts of text data to understand and generate human language. In the context of search, LLMs process queries, extract intent, retrieve relevant content, and generate answers instead of just serving links.

2. How do LLMs decide what content to cite or retrieve?

LLMs rely on semantic embeddings to match a user’s query with the most relevant documents. In Retrieval-Augmented Generation (RAG), they use vector databases or search APIs to retrieve semantically related content. Factors like structure, authority, freshness, and clarity impact your content’s chances of being retrieved and cited.

3. What’s the difference between keyword search and embedding-based search?

Traditional keyword search matches the words in a query against the words in a document. LLM-based search compares the meaning of the query, represented as an embedding, to document embeddings. This allows it to retrieve content that is semantically relevant even when the exact words don’t match.

4. How can I optimize my content for LLMs and AI search engines?

To optimize for LLMs:

- Use clear, concise sections (~200 words each)

- Add structured data (FAQ, HowTo, Product schema)

- Include named entities and explicit facts

- Match the format and intent of common AI-generated queries (definitions, comparisons, lists)
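To add FAQ structured data, you can generate schema.org `FAQPage` JSON-LD from your existing question/answer pairs. The sketch below uses the standard `FAQPage`, `Question`, and `Answer` types. `faq_jsonld` is a hypothetical helper, and the output would go in a `<script type="application/ld+json">` tag on the page:

```python
import json


def faq_jsonld(faqs: list[tuple[str, str]]) -> dict:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }


faqs = [(
    "What is a Large Language Model (LLM)?",
    "An AI system trained on massive amounts of text data to understand and generate human language.",
)]
print('<script type="application/ld+json">')
print(json.dumps(faq_jsonld(faqs), indent=2))
print("</script>")
```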

5. Do all LLMs access the live web?

No. A model on its own answers from its training data. Fresher content comes from a retrieval layer added on top, which, depending on the product, may pull from a cached index, a vector database, or a live search API. ChatGPT’s web browsing (originally “Browse with Bing”) and Perplexity query live sources, and Gemini can draw on Google’s index.

6. Why is Wikipedia cited so often by LLMs?

Wikipedia’s clean structure, clear entity definitions, stable URLs, and lack of ads make it ideal for LLM retrieval and citation. Its semantic consistency and domain authority also contribute to frequent inclusion in AI responses.

7. What’s the future of SEO in an LLM-powered world?

SEO is evolving into Generative Engine Optimization (GEO), which focuses on getting content cited, extracted, or summarized by AI models. The goal isn’t just to rank in blue links, but to become the source of truth within AI-generated answers across ChatGPT, Gemini, Perplexity, and others.