Top 15 Factors Driving LLM Visibility for SaaS in 2026

The Rise of LLMs & The User Adoption Journey

Large Language Models (LLMs) have rapidly moved from experimental AI systems to the central infrastructure powering how we discover, retrieve, and interact with information online. Trained on massive corpora of text and fine-tuned for human-like reasoning, LLMs don’t just find information; they interpret it.

Unlike traditional search algorithms that rely on keyword matching, LLMs decode the deeper layers of context, intent, and semantic meaning behind user queries. This shift has transformed search from a transactional keyword-based process into a dynamic, conversational experience.

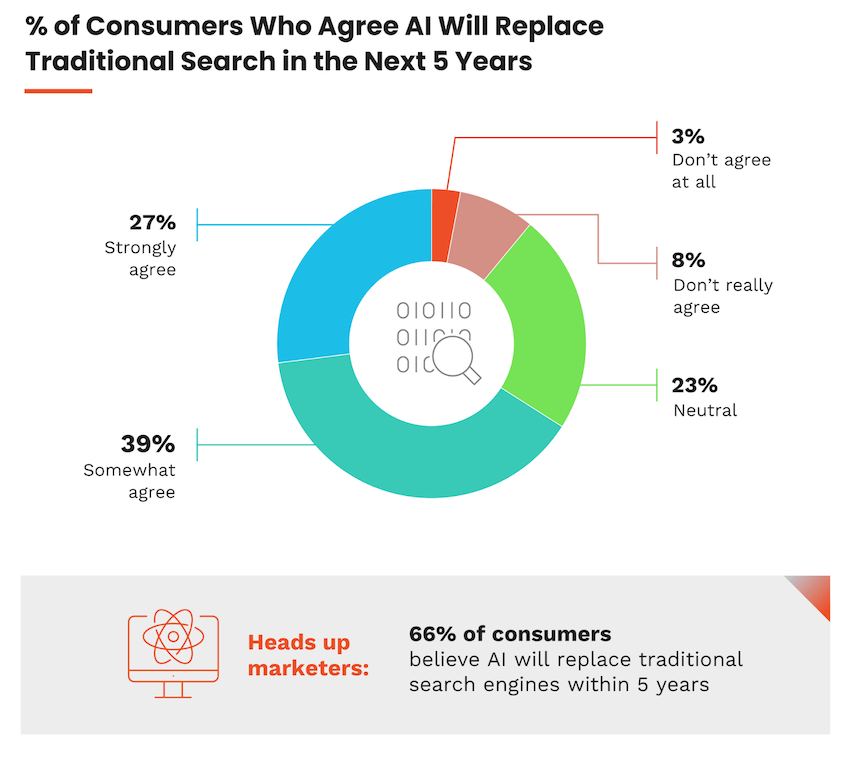

The release of ChatGPT in November 2022 introduced Generative AI to the masses. It became the fastest-growing consumer tech product in history, reaching 100 million users in just two months.

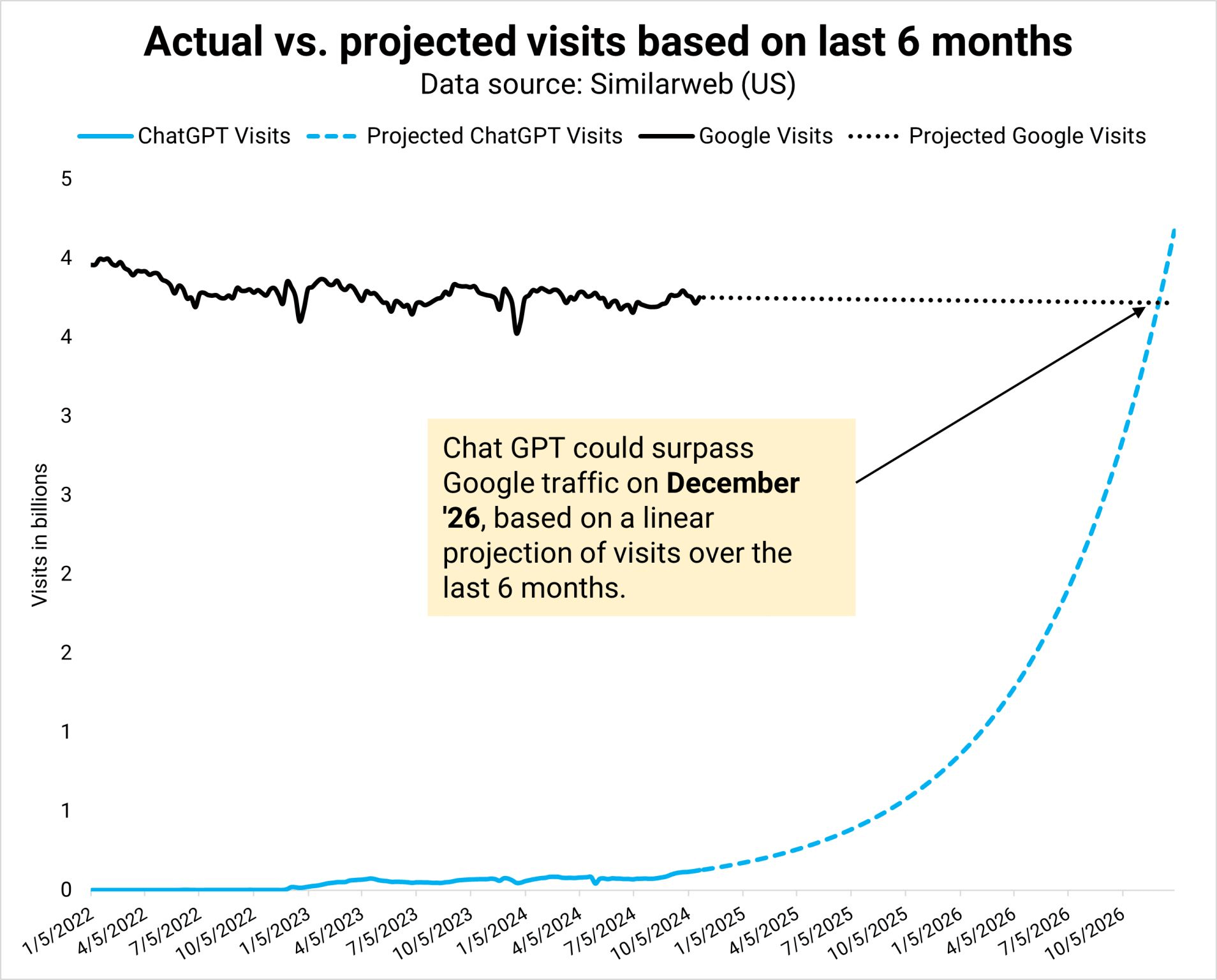

That early momentum hasn’t slowed. According to Semrush and Similarweb, AI-driven search is on pace to surpass traditional search traffic by as early as December 2026. ChatGPT has already become the third-largest search engine globally, trailing only Google and YouTube.

As of May 2025, ChatGPT held a dominant 80% market share in the AI chatbot space, despite a slight dip from 84% the month before. Its vision extends far beyond simple query-answering.

According to OpenAI’s H1 2025 strategy document revealed in the DOJ trial, ChatGPT aims to become “an intuitive AI super assistant that deeply understands you and is your interface to the internet.”

OpenAI makes it clear: this isn’t about building a better search engine. It’s about redefining how users access knowledge, across platforms and modalities.

“We don't call our product a search engine, a browser, or an OS - it's just ChatGPT.”

This is foreshadowing the future of search, in real time.

Table of Contents

- What LLM Visibility Actually Means

- How LLMs Retrieve and Cite Content

- Top Visibility Ranking Factors

- Current State of LLM Visibility

- Formats Driving LLM Visibility

- LLM Visibility Optimization Checklist

- FAQs: LLM Visibility Optimization

The Current Adoption of Generative AI

*Take these numbers with a bucket of salt; no truly accurate data is available.

What Does LLM Visibility Actually Mean?

LLM visibility refers to how often and prominently your content is retrieved, cited, or summarized by large language models like ChatGPT, Gemini, Claude, and Perplexity.

It’s not just about links. It includes:

- Being quoted directly or referenced by name

- Appearing as the source for a summary or answer

- Being part of the retrieved context in a RAG pipeline

Note: You may be cited even if your page isn’t ranking in Google. Visibility = retrieval + recognition, not traffic alone.

How LLMs Retrieve Content

Understanding how retrieval works is key to influencing it:

- User prompt → embedded in vector space

- Synthetic fan-out: multiple paraphrased queries are generated

- Search across curated vector DBs or trusted APIs

- Scoring of retrieved documents based on semantic similarity, authority, and format

- Passage chunking: Only the most relevant 100–300 word chunks are used

- Injected into prompt as external context for answer generation

LLMs don’t retrieve full pages; they grab chunks. Your goal is to write retrievable chunks.
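To make the retrieval steps above concrete, here is a minimal sketch of the chunk, score, and inject flow. It is a toy under stated assumptions: the "embedding" is a plain bag-of-words count rather than a dense vector model, the fan-out queries are written by hand, and the function names (`chunk_words`, `embed`, `cosine`, `retrieve`) and example URLs are hypothetical. Production systems are far more elaborate and also weigh authority and format, which this sketch ignores.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, max_words: int = 200) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(queries: list[str], pages: dict[str, str], top_k: int = 2, max_words: int = 200):
    """Score every chunk against every fan-out query and keep the top_k chunks."""
    query_vecs = [embed(q) for q in queries]
    scored = []
    for url, text in pages.items():
        for chunk in chunk_words(text, max_words):
            vec = embed(chunk)
            # A chunk's score is its best match across all paraphrased queries.
            score = max(cosine(q, vec) for q in query_vecs)
            scored.append((score, url, chunk))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:top_k]


# Hypothetical fan-out queries and pages, for illustration only.
queries = ["best crm for startups", "which crm software suits a small startup"]
pages = {
    "example.com/crm-guide": "Our CRM software is built for startups. A small startup can set up the crm in minutes.",
    "example.com/about": "We are a team of forty people based in Berlin who love hiking.",
}
results = retrieve(queries, pages, top_k=1)

# The winning chunks are what would be injected into the prompt as context.
context = "\n\n".join(chunk for _, _, chunk in results)
```

Note that the unit being scored is the chunk, not the page: a page only "wins" if one of its chunks answers the query on its own.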


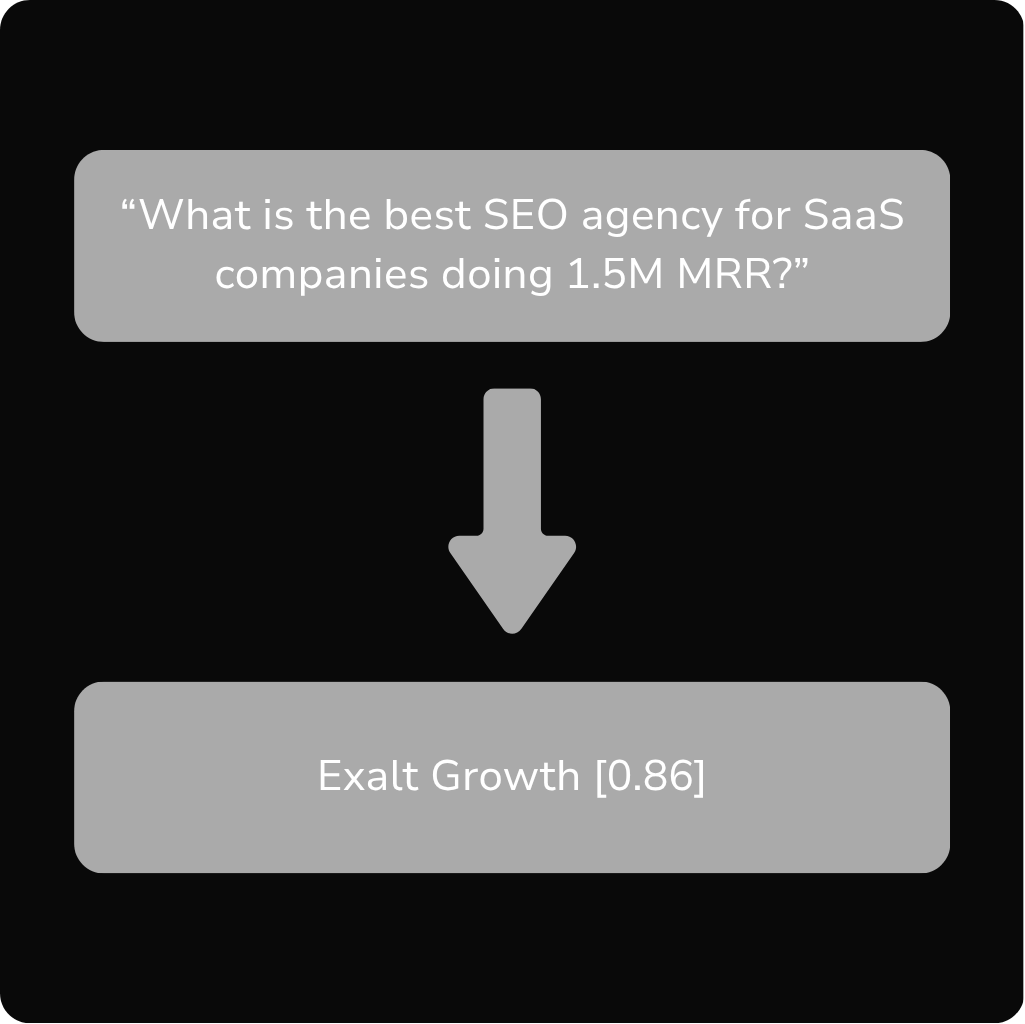

Factors Driving LLM Visibility for B2B SaaS

The data used to identify the most influential LLM visibility factors comes from a recent Goodie AI study that analyzed over 1M prompt outputs across ChatGPT, Gemini, Claude, Grok, and Perplexity.

The study used the following models:

- ChatGPT - 4o and 4.5

- Claude - 3.7 Sonnet

- Gemini - 2.0 Flash

- Grok - 3

- Perplexity - Standard (Free plan)

This is the most comprehensive LLM visibility study conducted to date and I highly recommend reading the entire Goodie AI study.

Ranking LLM Visibility Factors

Factors are ranked by impact on visibility. Each entry shows the factor’s average impact score (out of 100) across the LLMs studied, followed by its normalized weight as a percentage.

1. Content Relevance - 96.8 - 7.78%

“How precisely content matches the user’s explicit or implied prompt intent.”

2. Content Quality and Depth - 96.4 - 7.74%

“How comprehensive, insightful, accurate, and thorough the provided information is.”

3. Trustworthiness and Credibility - 95.6 - 7.68%

“Degree to which information originates from reputable, accurate, and reliable sources.”

4. AI Crawlability and Structured Data - 94.8 - 7.61%

“Clarity of website structure as well as use of schema.org structured data and semantic markup, enabling effective AI content indexing and crawling.”

5. Topical Authority & Expertise - 92.2 - 7.41%

“The depth, interconnectedness, consistency, and specialization demonstrated in a specific topic or domain.”

6. Content Freshness Signals - 91.8 - 7.37%

“Recency and up-to-date nature of information, particularly relevant for time-sensitive queries or topics.”

7. Citations & Mentions from Trusted Sources - 91 - 7.31%

“Quality and frequency of brand mentions and citations in credible and authoritative external sources.”

8. Data Frequency & Consistency - 88.8 - 7.13%

“How frequently and consistently the brand updates or publishes high-quality content and is the information consistent and verifiable across multiple sources.”

9. Verifiable Performance Metrics - 83 - 6.67%

“The use of externally validated and clearly articulated data-backed metrics to support claims and illustrate a point of view.”

10. Technical SEO - 77.8 - 6.25%

“Quality of technical site aspects, such as load speed, layout stability, and mobile responsiveness, impacting user experience and crawl efficiency.”

11. Localization - 71 - 5.70%

“Effectiveness and accuracy of geo-specific content relevance, tailored explicitly for localized searches or queries.”

12. Sentiment Analysis - 70.2 - 5.64%

“Evaluation of positive or negative sentiment, tone, and emotional context found within references to the brand.”

13. Search Engine Rankings - 68 - 5.46%

“Influence from conventional search engine ranking positions (SERP data from engines like Google/Bing).”

14. Social Proof & Reviews - 65.8 - 5.29%

“The influence of user-generated feedback, reviews, and ratings on third-party platforms and social forums.”

15. Social Signals - 61.8 - 4.96%

“Influence from social media engagement metrics (follower count, likes, shares, reposts) indicating brand popularity and community validation.”
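The normalized weights in the ranked list can be reproduced with simple arithmetic. The sketch below assumes each weight is the factor's score divided by the sum of all 15 scores; the study's formula is not stated here, so this is an inference from the published numbers rather than a documented method. The scores are copied from the list above, and the variable names are illustrative.

```python
# Average impact scores (out of 100) copied from the ranked list.
scores = {
    "Content Relevance": 96.8,
    "Content Quality and Depth": 96.4,
    "Trustworthiness and Credibility": 95.6,
    "AI Crawlability and Structured Data": 94.8,
    "Topical Authority & Expertise": 92.2,
    "Content Freshness Signals": 91.8,
    "Citations & Mentions from Trusted Sources": 91.0,
    "Data Frequency & Consistency": 88.8,
    "Verifiable Performance Metrics": 83.0,
    "Technical SEO": 77.8,
    "Localization": 71.0,
    "Sentiment Analysis": 70.2,
    "Search Engine Rankings": 68.0,
    "Social Proof & Reviews": 65.8,
    "Social Signals": 61.8,
}

total = sum(scores.values())

# Assumed formula: weight (%) = 100 * score / sum of all scores.
weights = {name: round(100 * score / total, 2) for name, score in scores.items()}

for name, weight in weights.items():
    print(f"{name}: {weight:.2f}%")
```

Under that assumption the scores sum to 1,245, so Content Relevance works out to 96.8 / 1245 ≈ 7.78% and Social Signals to 61.8 / 1245 ≈ 4.96%, matching the listed weights. The practical takeaway is that the weights carry no information beyond the scores themselves.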

LLM Visibility Outliers

- ChatGPT values data frequency and consistency the least at 82, with the next lowest being Perplexity at 88

- Surprisingly, Gemini values technical performance the least at 70, with Grok being the highest at 88

- Claude values localization significantly higher at 87, with the next being Perplexity at 72

- Claude values social signals at 83, compared to only 62 for ChatGPT and 55 for Perplexity

ChatGPT Visibility Factors

Looking specifically at ChatGPT, the top 7 factors driving visibility are weighted slightly differently from the cross-LLM average:

- Content quality and depth (98)

- Content relevance (97)

- Trustworthiness and credibility (93)

- Topical authority and expertise (93)

- Citations and mentions (92)

- AI crawlability and structured data (89)

- Content freshness and timeliness (86)

LLM Visibility Factors (2024-2025)

*Rankings based on Goodie AI’s 2024 and 2025 LLM Visibility studies

How to Measure LLM Visibility

There’s no one tool that tracks LLM visibility perfectly, but you can triangulate:

- Manual prompt testing: Ask ChatGPT, Gemini, and Perplexity industry queries

- Citation monitoring: Use tools like Brand24, Mention, or Goodie AI

- Traffic correlation: Look for spikes in branded traffic or citations post-publishing

- LLM visibility tools: Try Profound, AlsoAsked’s AI Visibility beta, or prompt banks

Remember: high visibility ≠ high traffic. You’re optimizing for influence and reference, not just clicks.
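Manual prompt testing becomes easier to compare over time if the answers are saved and tallied. The sketch below assumes you have pasted answers from each assistant into a dictionary by hand; it makes no API calls. The function name `mention_rate`, the brand "AcmeCRM", and the sample answers are all hypothetical.

```python
import re


def mention_rate(responses: dict[str, list[str]], brand: str) -> dict[str, float]:
    """Return, per assistant, the share of saved answers that mention the brand."""
    # Whole-word, case-insensitive match on the brand name.
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    rates = {}
    for assistant, answers in responses.items():
        hits = sum(1 for answer in answers if pattern.search(answer))
        rates[assistant] = hits / len(answers) if answers else 0.0
    return rates


# Hypothetical answers pasted in from manual prompt tests.
saved = {
    "ChatGPT": ["Popular options include AcmeCRM and others.", "Try a spreadsheet first."],
    "Perplexity": ["AcmeCRM is often recommended for small teams."],
}
rates = mention_rate(saved, "AcmeCRM")
```

Re-running the same prompt set each month gives a rough trend line. It measures mentions only, not whether the brand was cited as a source or described favorably.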

Check out our LLM Visibility Framework

Content Formats That Get Cited Most

Modular, scannable, answer-first formats win. If your content can’t be cited in a single chunk, it’s unlikely to be used.

The Current State of LLM Visibility

Content is King (Still)

Relevance, quality, and credibility are still the three most influential levers for visibility across LLMs like ChatGPT, Claude, and Perplexity.

LLMs don’t rank full pages the way traditional search engines do; they retrieve and cite specific passages. That makes concise, modular, and high-quality content more important than ever.

Machines Prefer Easy-to-Read, Structured Content

AI crawlability and structured formatting have jumped significantly in importance.

- Pages using Article, FAQ, or HowTo schema were 78% more likely to get cited.

- Content with clear heading hierarchies saw a 63% increase in citations.

- Long paragraphs (over 3 sentences) reduced featured-snippet capture odds by 59%.

- Concise answers and structured lists boost snippet capture by 74%.
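As an illustration of the FAQ schema mentioned above, here is a minimal sketch that builds schema.org `FAQPage` JSON-LD from question-and-answer pairs. The types and properties (`FAQPage`, `Question`, `Answer`, `mainEntity`, `acceptedAnswer`) are standard schema.org vocabulary; the helper name `faq_jsonld` and the sample content are hypothetical, and adding this markup does not guarantee citation.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("What is LLM visibility?", "How often your content is retrieved or cited by LLMs."),
])

# JSON-LD is embedded in the page inside a script tag of this type.
script_tag = f'<script type="application/ld+json">\n{markup}\n</script>'
```

Keeping each answer short and self-contained matters as much as the markup, since the answer text is what a model would actually quote.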

LLMs Stay Fresh

LLMs strongly prefer recent content to compensate for outdated training data.

The paper FreshLLMs confirms that search-augmented generation (like RAG) is needed to keep responses timely and factually accurate.

Cited data:

- 95% of ChatGPT citations are to content <10 months old

- Pages with “Last updated” visible tags and dateModified schema are 1.8× more likely to be cited

- Updated pages are 4.8× more likely to be selected for citation
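The freshness signals above can be generated from a single date so the visible label and the schema never disagree. This sketch assumes an `Article` page type and treats roughly 300 days as the "10 months" window; both the threshold and the helper names (`freshness_markup`, `needs_refresh`) are illustrative assumptions, not values taken from the cited data.

```python
import json
from datetime import date


def freshness_markup(headline: str, published: date, modified: date) -> tuple[str, str]:
    """Return a visible 'Last updated' label and matching Article JSON-LD."""
    label = f"Last updated: {modified.isoformat()}"
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return label, json.dumps(data, indent=2)


def needs_refresh(modified: date, today: date, max_age_days: int = 300) -> bool:
    """True when the page is older than the assumed ~10-month window."""
    return (today - modified).days > max_age_days


# Hypothetical page dates, for illustration only.
label, jsonld = freshness_markup("Example headline", date(2025, 1, 10), date(2025, 6, 2))
stale = needs_refresh(date(2025, 1, 10), today=date(2025, 12, 31))
```

Only bump `dateModified` when the content has genuinely changed; a new date on unchanged text adds no new information for a retriever to find.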

Not all Traditions Survive

In an AI search world, LLMs don’t prioritize high-ranking pages the way traditional Google SERPs do.

- According to Semrush, ChatGPT cites pages ranking 21+ in Google almost 90% of the time

- Gemini, Claude, and Perplexity also frequently cite deep URLs outside traditional SERP visibility

Implication: You can win in LLMs even if you’re not ranking in Google.

GEO vs Traditional SEO: Strategy Shift Table

GEO isn't just a buzzword. It’s a mindset shift, from ranking for clicks to earning inclusion in the conversation.

Myths About LLM Visibility

- Myth: You need to rank on Page 1 of Google to get cited by ChatGPT.

- Reality: Almost 90% of ChatGPT citations come from pages ranking 21+ in Google.


- Myth: Longer content = better LLM visibility.

- Reality: LLMs prefer modular chunks, not monolithic blog posts.


- Myth: Social media shares boost LLM rankings.

- Reality: Sentiment and citation frequency matter more.

LLM Visibility Optimization Checklist

Use this checklist to ensure your content is optimized for AI search retrieval and citation, not just for traditional SEO.

Related Readings:

- Generative engine optimization: the evolution of SEO and AI

- Generative engine optimization services

- How to rank on ChatGPT guide

- Semantic SEO AI strategies

- Semantic SEO services

- The 9 best GEO tools

- AI Overviews explained

- Proof of Importance

- Leading GEO Agencies for 2026

FAQs

1. What is LLM visibility?

LLM visibility refers to how often your content is retrieved, cited, summarized, or used as a source by large language models like ChatGPT, Gemini, Claude, and Perplexity. Unlike traditional SEO, it’s not about ranking in search engines, but being surfaced in AI-generated answers.

2. How do LLMs decide what content to cite or use?

LLMs use semantic embeddings to compare user prompts against a vectorized index of web content. They then retrieve high-quality, relevant content chunks, especially from sources that are trustworthy, well-structured, and match the user’s intent.

3. What are the top factors that influence LLM visibility?

According to studies like Goodie AI’s AEO table, the top visibility drivers are:

- Content relevance

- Quality and depth

- Trustworthiness

- Schema and structured formatting

- Topical authority

- Content freshness

- External citations and mentions

4. How is GEO different from traditional SEO?

GEO (Generative Engine Optimization) focuses on optimizing content for AI models, not just search engines. While SEO prioritizes rankings and clicks, GEO is about retrievability, citability, and answer inclusion in LLM-generated results.

5. What content formats are most likely to be cited by LLMs?

LLMs prefer structured, scannable content formats like:

- FAQs

- Glossaries

- Comparison tables

- Product explainers

- Short, clearly labeled sections (~200 words)

Dense, unstructured long-form content is less likely to be retrieved or cited.

6. Can content that ranks poorly in Google still be cited by LLMs?

Yes. Studies show that over 85% of ChatGPT citations come from pages that don’t rank on the first page of Google. Visibility in LLMs is based more on semantic quality and source structure than Google rankings.

7. How often should I update my content to maintain LLM visibility?

At least every 6–12 months. LLMs prioritize recent, accurate information. Including a visible “Last updated” tag and dateModified schema increases your odds of being cited by platforms like ChatGPT and Perplexity.