How Google AI Mode works & how to rank

“The Future of Search”

Google’s AI Mode represents the most significant evolution of Google Search yet, surpassing earlier milestones like Universal Search, featured snippets, and AI Overviews.

Described by Google’s Head of Search, Liz Reid, as “the future of Google Search,” AI Mode integrates advanced large language models (LLMs) to transform search queries into intelligent, conversational interactions.

Table of Contents

- How AI Mode Works (Step-by-Step)

- Query Fan-Out Explained

- Modern SEO Ranking Factors

- Optimization Strategies for SaaS

- AI Mode Optimization Checklist

- AI Mode FAQs

From Links to Answers

This change marks a fundamental shift: moving from presenting a list of links to delivering personalized, multimodal answers. AI Mode uses reasoning, user context, and memory to create a more interactive and helpful experience.

Multimedia-Driven Results

Unlike traditional SERPs, AI Mode supports rich media inputs and outputs, combining video, audio, images, and transcripts into unified responses. This unlocks a more immersive and versatile search journey.

Challenges for Marketers and Publishers

While this innovation enhances user experience, it also poses challenges:

- Lower click-through rates

- Reduced organic traffic

- Limited visibility in Google Search Console

These shifts require marketers to rethink how visibility and performance are measured.

Google’s Competitive Response

AI Mode is a strategic answer to generative competition from platforms like ChatGPT and TikTok. Google is doubling down on user satisfaction and retention, even if that means keeping users on Google longer rather than driving traffic outward.

Powered by Gemini and Multi-Source Synthesis

AI Mode is built on a custom implementation of Google Gemini. It enables deep synthesis across:

- Structured content (schema, tables, FAQs)

- Unstructured content (blogs, forums, documentation)

The result is a more research-capable, context-aware search interface.

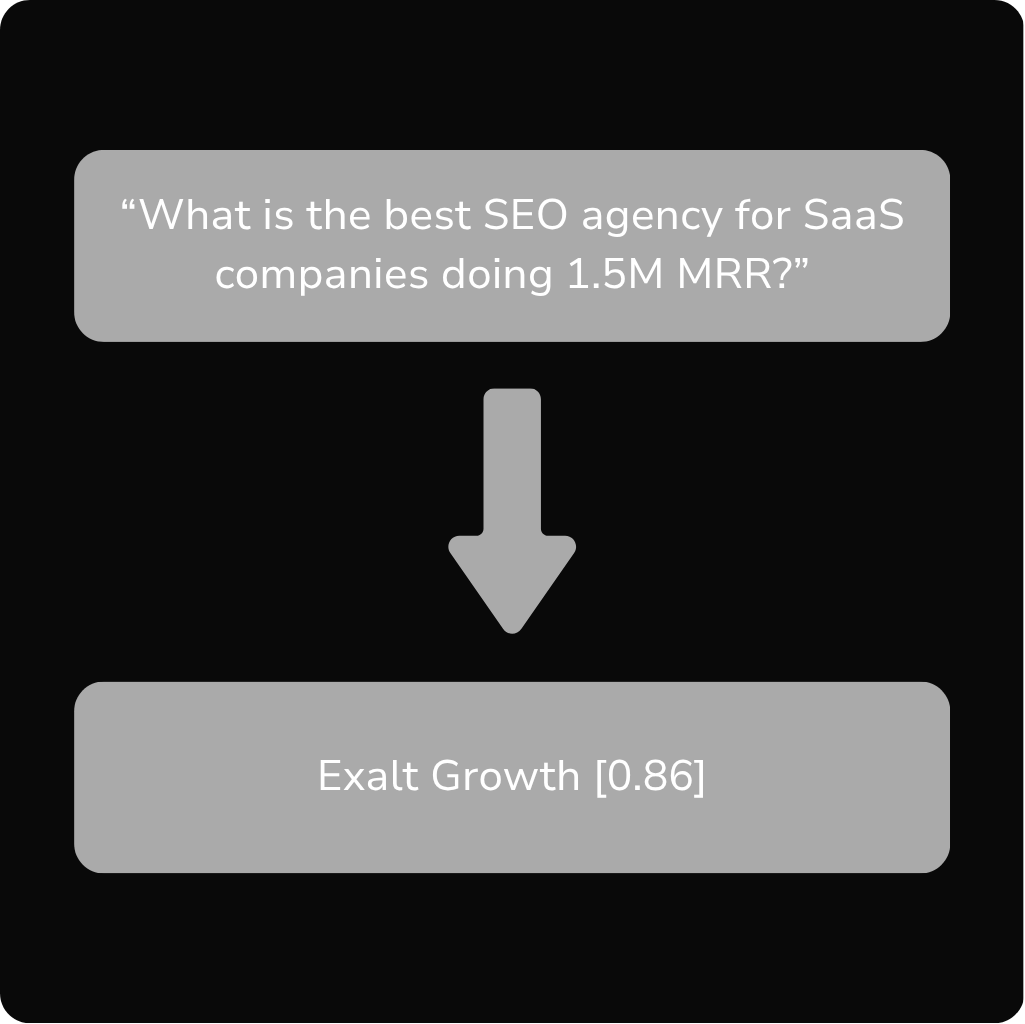

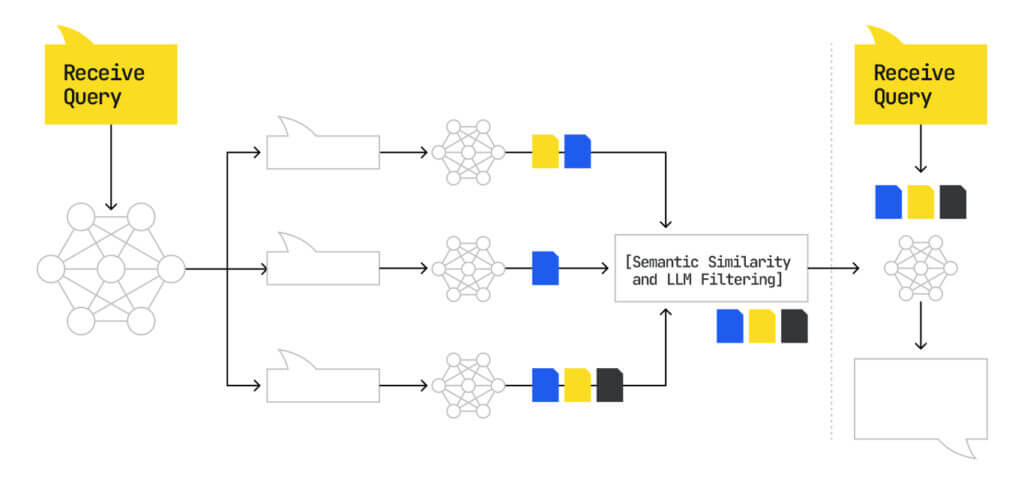

The Query Fan-Out Mechanism

One of the core innovations behind AI Mode is query fan-out. Instead of processing a single query linearly, AI Mode breaks it into multiple sub-queries, each addressing a different dimension of the user’s intent. These are executed in parallel across:

- Google’s Knowledge Graph

- Shopping & vertical databases

- Web index

This leads to hyper-relevant, well-rounded answers.

What Google’s Leadership Is Saying

CEO Sundar Pichai has confirmed the long-term vision:

“We’ll keep migrating it [AI Mode] to the main page… as features work.”

This points to a future where AI Mode becomes the default search experience.

What This Means for the Web

Despite the shift, traditional search isn’t disappearing overnight. Pichai has also stated that Google will continue linking to the open web:

“[The web] is a core design principle for us.”

This means that, for now, Google still needs content creators, publishers, and product sites to power its generative ecosystem.

Executive Summary

Google’s AI Mode is replacing traditional search with dynamic, multimodal answers powered by Gemini 2.5. It breaks down queries into sub-tasks using “query fan-out” and synthesizes answers across trusted sources. To appear in these responses, your content must be modular, semantically rich, task-structured, and E-E-A-T optimized.

Related Readings:

- Generative engine optimization: the evolution of SEO and AI

- Generative engine optimization services

- How to rank on ChatGPT guide

- Semantic SEO AI strategies

- Semantic SEO services


- The 9 best GEO tools

- AI Overviews explained

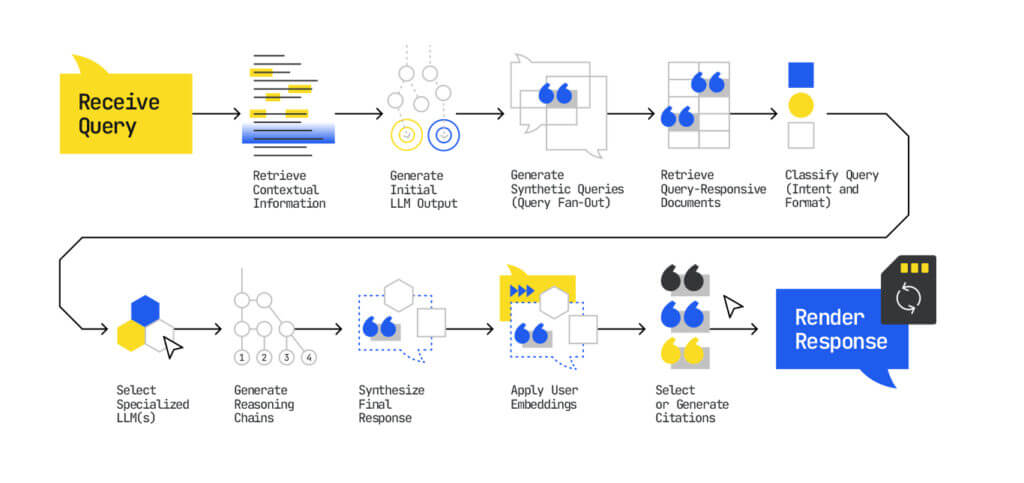

How AI Mode Works

Based on the “Search with stateful chat” patent.

Summary Snapshot

Query ➜ Context ➜ LLM Intent ➜ Synthetic Queries ➜ Retrieval ➜ Chunk Evaluation ➜ Specialized LLM ➜ Composition ➜ Delivery

Step 1. Query Searched

Trigger:

The user initiates a search query.

Insight:

This marks the shift from document retrieval to answer synthesis.

Unlike traditional search, which primarily retrieves matching documents, this step begins a generative synthesis process aimed at delivering a composed answer rather than just a ranked list of results.

Optimization Implication:

Ensure your content aligns with informational intent and is formatted in ways conducive to synthesis (clear, declarative answers; modular structures).

Step 2. Retrieve Context Associated With User/Device

Trigger:

The system gathers contextual data.

Insight:

Search becomes personalized and session-aware. Google retrieves relevant context, which may include prior queries in the same session, user location, device type, Google account history, and personalized behavior. This ensures continuity and personalization of results.

AI Mode is contextually intelligent:

- Tracks and adapts based on past interactions.

- Supports multi-turn conversations.

Optimization Implication:

Match your content to likely user journeys: tailor it to personas, devices, and intent stages to increase contextual relevance.

Step 3. Generate LLM Output Based on Data and User Context

Trigger:

The LLM begins semantic reasoning.

Insight:

This step builds intent models and potential task flows. A large language model (e.g., Gemini 2.5) processes the query in light of context. It generates a preliminary intent map and candidate answers structured around task completion and thematic understanding.

Optimization Implication:

Use headings, question formats, and use-case language that mirror task-based workflows and align with structured reasoning.

Step 4. Generate One or More Synthetic Queries Using LLM Output

Trigger:

The system creates fan-out sub-queries.

Insight:

This is the core of Google's Query Fan-Out system.

The original query is decomposed into several focused sub-queries:

- Each sub-query targets a unique facet of the broader question, inferred through intent modeling and latent goal estimation (Systems and methods for prompt-based query generation for diverse retrieval patent).

- Google uses a priority-based system to assign weights to these synthetic queries based on predicted utility, complexity, and semantic scope.

- Queries are dispatched concurrently across different source types.

- The multi-source synthesis phase selectively extracts semantically coherent “chunks,” treating them as composable answer units (Thematic search patent).

- Outputs are aggregated using chunk-level semantic similarity, not page-level relevance.

Optimization Implication:

Write content that directly addresses specific sub-intents within broader topics. Use modular design (FAQs, how-tos, tabbed sections) that can be independently extracted and recombined for synthesis.

Step 5. Select a Set of Search Result Documents

Trigger:

Retrieval of candidate sources.

Insight:

Traditional retrieval is augmented with LLM-generated fan-out queries. Synthetic queries retrieve documents from Google’s proprietary index, not the live web. This includes:

- Web content (text, images, video, tables)

- Knowledge graphs

- Structured data (schema, tables, FAQs)

- UGC (forums, reviews)

While traditional retrieval methods (e.g., BM25, neural rankers) are still used, they're enhanced by LLM-driven query understanding.

Relevance is based on:

- Salience (how central a chunk is to the topic)

- Topical authority (site/domain-level reputation based on entity co-occurrence and structured citations)

- Semantic proximity (as described in the Thematic search patent, Google scores individual content “chunks” for inclusion potential using factors like specificity, format, and link density)

Unlike traditional IR systems that prioritize whole-document relevance, AI Mode ranks and selects based on granular passage salience.
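To make passage-level ranking concrete, the sketch below applies the classic Okapi BM25 formula (one of the traditional retrieval methods mentioned above) to individual passages rather than whole pages. The parameters k1 = 1.5 and b = 0.75 are common textbook defaults, not values Google has disclosed, and the passages and query are made-up examples.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query: str, passages: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score each passage against the query with Okapi BM25.

    Every passage is treated as its own 'document', so ranking happens
    at passage level instead of page level.
    """
    docs = [tokenize(p) for p in passages]
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: how many passages contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # BM25 IDF with +1 inside the log so it never goes negative.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            norm = freq + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


passages = [
    "Our company was founded in 2015 and is headquartered in Austin.",
    "Pricing starts at $12 per user per month, with a free tier for startups.",
    "The CRM integrates with Slack, Gmail, and HubSpot.",
]
scores = bm25_scores("crm pricing for startups", passages)
best = max(range(len(passages)), key=scores.__getitem__)
print(passages[best])
```

The pricing passage wins even though the "About" passage sits on the same hypothetical page, which is the practical meaning of "granular passage salience."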

Optimization Implication:

Focus less on exact-match keywords, more on ensuring your content clearly communicates topic relevance, depth, and authority. Use structured data and FAQs to improve retrievability.

Step 6. Process Query, Contextual Information, Synthetic Queries, and Search Documents

Trigger:

Fusion and reasoning across sources.

Insight:

LLMs evaluate content salience and trust at the chunk level (see “Query response using a custom corpus” patent). In this synthesis phase, Google’s system:

- Integrates all inputs (original query, synthetic sub-queries, session context, and retrieved chunks)

- Analyzes the different pieces of information to figure out which ones are most relevant (semantically) and trustworthy (authorship, citations, freshness) for answering the query

- Chunks scoring below a relevance threshold are excluded. High-quality chunks are passed forward for synthesis.

The system then scores each content unit for:

- Factuality and verifiability (E-E-A-T proxy signals)

- Recency and consistency: Is the content current, and are its facts corroborated across multiple sources?

- Composability: Whether the chunk fits within a coherent output narrative (Thematic search patent)

- Latent relevance: How well the content fulfills the inferred goals of the user (Systems and methods… patent)

- Outputs are probabilistic: they vary by query context, user profile, and response generation mode.

Optimization Implication:

Establish clear topical boundaries. Each block of content should be self-contained, deeply relevant, and attributed. Think “answer-ready segments.”

Step 7. Based on Query Classification, Select Downstream LLM(s)

Trigger:

System selects the right tool for the task.

Insight:

Specialization ensures better quality. Google classifies the query type (informational, transactional, comparative) and invokes specialized downstream models tailored to content fusion, comparison synthesis, or summarization.
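The routing idea can be illustrated with a deliberately simple keyword classifier that maps a query to a type and then to a downstream handler. Google's actual classifier and model names are not public; the cue words, the three categories as implemented here, and the handler labels are all hypothetical stand-ins.

```python
from typing import Callable

# Hypothetical cue words per query type; a real system would use a learned classifier.
CUES = {
    "comparative": ("vs", "versus", "compare", "alternatives", "better than"),
    "transactional": ("buy", "pricing", "price", "discount", "sign up", "free trial"),
}


def classify(query: str) -> str:
    """Return 'comparative', 'transactional', or the fallback 'informational'."""
    q = f" {query.lower()} "  # pad so cues match whole words only
    for query_type, cues in CUES.items():
        if any(f" {cue} " in q for cue in cues):
            return query_type
    return "informational"


# Hypothetical downstream handlers standing in for specialized models.
HANDLERS: dict[str, Callable[[str], str]] = {
    "comparative": lambda q: f"comparison-synthesis model <- {q}",
    "transactional": lambda q: f"product/offer model <- {q}",
    "informational": lambda q: f"summarization model <- {q}",
}


def route(query: str) -> str:
    """Classify the query, then hand it to the matching handler."""
    return HANDLERS[classify(query)](query)


print(route("HubSpot vs Salesforce for startups"))
print(route("how does query fan-out work"))
```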

Optimization Implication:

Create content that serves multiple formats (how-to guides, decision frameworks, product comparisons) to match LLM processing needs.

Step 8. Generate Additional LLM Output(s) Using the Selected Downstream LLM(s)

Trigger:

Final synthesis phase.

Insight:

LLMs stitch together semantically aligned chunks into a natural answer. The downstream model assembles the final output using:

- Previously scored content “chunks” with high composability values (Thematic search patent)

- Session data and inferred latent goals (Systems and methods… patent)

- Known response templates (e.g., lists, summaries)

This step prioritizes user-friendly rendering by stitching together just-in-time generated responses and pre-validated content blocks. Citations are added if a chunk’s factual confidence exceeds a predefined threshold. This ensures attribution only where warranted.
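As a rough illustration of "cite only above a confidence threshold," the sketch below assembles a list-template answer from pre-scored chunks and attaches a source URL only when a chunk's confidence clears a cutoff. The `Chunk` fields, the 0.5 composability floor, the 0.8 citation threshold, and the URLs are illustrative assumptions, not published Google parameters.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source_url: str
    composability: float  # how well the chunk fits the answer template (0-1)
    confidence: float  # factual confidence (0-1)


def compose_answer(chunks: list[Chunk], min_composability: float = 0.5,
                   cite_threshold: float = 0.8) -> str:
    """Assemble a bulleted answer; cite a source only above cite_threshold."""
    usable = [c for c in chunks if c.composability >= min_composability]
    # Most composable chunks first, so the strongest passages lead the answer.
    usable.sort(key=lambda c: c.composability, reverse=True)
    lines = []
    for chunk in usable:
        line = f"- {chunk.text}"
        if chunk.confidence >= cite_threshold:
            line += f" [{chunk.source_url}]"
        lines.append(line)
    return "\n".join(lines)


chunks = [
    Chunk("Free tier covers up to 2 users.", "https://example.com/pricing", 0.9, 0.95),
    Chunk("Many teams find it easy to use.", "https://example.com/blog", 0.7, 0.6),
    Chunk("Founded by two engineers.", "https://example.com/about", 0.2, 0.9),
]
print(compose_answer(chunks))
```

Note that the vague "easy to use" claim still appears but earns no citation, while the off-topic founder chunk is dropped despite high confidence.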

Optimization Implication:

Structure your site and content like a knowledge base. Label sections with intent-driven H2s/H3s. Modular, reusable formatting (like cards, tables, and lists) improves composability. Also, create content formats that naturally lend themselves to generative layout styles: steps, comparisons, definitions, pros/cons, lists, etc.

Step 9. Cause Natural Language (NL) Response to Be Rendered at the Client Device

Trigger:

Delivery to user.

Insight:

Final answer completes a feedback loop. The composed response is rendered at the user’s device in the AI Mode interface. It may include:

- Linkified references or citations

- Inline tables, summaries, or lists

- Attribution if confidence permits

This output also updates the user state context, influencing how future queries in the session are interpreted.

Optimization Implication:

Think in terms of visibility, not traffic. You want to be the cited or mentioned source inside the response. Create content blocks that provide value even when consumed out of full-page context.

How Query Fan-Out Works

1. Initial Query Analysis

Based on the “Search with stateful chat” patent, when a user enters a query, the system first:

- Assesses user/device context (past queries, location, behavior).

- Generates an intent map using a large language model (an LLM such as Gemini).

- Identifies latent goals or task categories embedded in the query.

Example: For a query like “best CRM tools for startups”, the model might detect sub-intents like:

- Comparison of CRMs

- Features tailored to startups

- Pricing or free tiers

- Integrations

2. Synthetic Query Generation

Using the method outlined in the Prompt-based query generation for diverse retrieval patent, the LLM creates multiple synthetic queries, each focused on a specific sub-intent.

These synthetic queries may be prioritized based on:

- Utility (how useful the answer is likely to be)

- Coverage (how much of the original query’s intent it satisfies)

- Novelty or semantic scope

This widens Google’s understanding across various interpretations of the same user need.
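A toy version of this step for the “best CRM tools for startups” example: sub-intents become synthetic queries, and each is ranked by a weighted mix of the three criteria above. The query templates, per-query scores, and weights are made-up numbers for illustration; the patent names the factors but does not publish a formula.

```python
from dataclasses import dataclass

# Hypothetical weights for the three prioritization criteria.
WEIGHTS = {"utility": 0.5, "coverage": 0.3, "novelty": 0.2}


@dataclass
class SyntheticQuery:
    text: str
    utility: float
    coverage: float
    novelty: float

    def priority(self) -> float:
        """Weighted sum of the three criteria."""
        return (WEIGHTS["utility"] * self.utility
                + WEIGHTS["coverage"] * self.coverage
                + WEIGHTS["novelty"] * self.novelty)


def fan_out(query: str) -> list[SyntheticQuery]:
    """Expand a query into sub-intent queries with illustrative scores."""
    subject = query.removeprefix("best ")
    return [
        SyntheticQuery(f"compare {subject}", 0.9, 0.8, 0.3),
        SyntheticQuery(f"{subject} key features", 0.8, 0.6, 0.6),
        SyntheticQuery(f"{subject} pricing and free tiers", 0.9, 0.5, 0.4),
        SyntheticQuery(f"{subject} integrations", 0.6, 0.4, 0.7),
    ]


ranked = sorted(fan_out("best CRM tools for startups"),
                key=SyntheticQuery.priority, reverse=True)
for sq in ranked:
    print(f"{sq.priority():.2f}  {sq.text}")
```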

3. Sub-query Dispatch to Diverse Corpora

Each synthetic query is run concurrently across different data types:

- Google’s proprietary web index

- Structured data (tables, schema, FAQs)

- Knowledge graphs (entities and relationships)

- User-generated content (forums, Q&A)

According to the Thematic search patent, the system then retrieves passage-level “chunks” that are:

- Semantically aligned with each synthetic query

- Scored based on composability, specificity, and E-E-A-T signals

In effect, Google is “scrapbooking the web,” selecting the most relevant parts across sources.
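Concurrent dispatch of this kind follows a standard scatter-gather pattern. The sketch below uses Python's `asyncio.gather` to send every synthetic query to several corpora at once and regroup the results per sub-query; the corpus names and the `search_corpus` stub are hypothetical placeholders for real retrieval backends.

```python
import asyncio

# Hypothetical corpora; each would be a separate retrieval backend in practice.
CORPORA = ("web_index", "structured_data", "knowledge_graph", "ugc")


async def search_corpus(corpus: str, query: str) -> list[str]:
    """Stub retrieval call: simulate latency and return a labelled passage ID."""
    await asyncio.sleep(0.01)
    return [f"{corpus}:{query}:passage-1"]


async def dispatch(sub_queries: list[str]) -> dict[str, list[str]]:
    """Run every (sub-query, corpus) pair concurrently; group results by sub-query."""
    pairs = [(q, c) for q in sub_queries for c in CORPORA]
    # gather preserves input order, so results line up with pairs.
    results = await asyncio.gather(*(search_corpus(c, q) for q, c in pairs))
    grouped: dict[str, list[str]] = {q: [] for q in sub_queries}
    for (q, _), passages in zip(pairs, results):
        grouped[q].extend(passages)
    return grouped


sub_queries = ["crm pricing for startups", "crm slack integration"]
results = asyncio.run(dispatch(sub_queries))
for q, passages in results.items():
    print(q, len(passages))
```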

4. Chunk Selection and Filtering

Each passage or chunk is evaluated based on:

- Salience – How central it is to the synthetic query

- Relevance – Based on latent query intent

- Trustworthiness – Cited sources, author credibility

- Format – Lists, steps, tables are easier to compose

Google’s LLM uses neural attention mechanisms to find which chunks are most informative, factual, and easy to assemble into an answer. Low-confidence content is discarded.
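The four criteria can be pictured as a weighted score with a cutoff. The weights, the 0.6 threshold, and the per-chunk ratings below are hypothetical; the patents describe the factors, not a formula.

```python
# Hypothetical weights for salience, relevance, trust, and format (sum to 1.0).
WEIGHTS = {"salience": 0.35, "relevance": 0.30, "trust": 0.20, "format": 0.15}
THRESHOLD = 0.6  # hypothetical cutoff below which a chunk is discarded


def chunk_score(ratings: dict[str, float]) -> float:
    """Combine 0-1 ratings per criterion into one weighted score."""
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


def select_chunks(chunks: dict[str, dict[str, float]]) -> list[str]:
    """Keep chunks scoring at or above THRESHOLD, best first."""
    scored = {cid: chunk_score(r) for cid, r in chunks.items()}
    kept = [cid for cid, s in scored.items() if s >= THRESHOLD]
    return sorted(kept, key=scored.__getitem__, reverse=True)


candidates = {
    "pricing-table": {"salience": 0.9, "relevance": 0.9, "trust": 0.8, "format": 1.0},
    "founder-story": {"salience": 0.3, "relevance": 0.4, "trust": 0.7, "format": 0.2},
    "setup-steps": {"salience": 0.7, "relevance": 0.6, "trust": 0.6, "format": 0.9},
}
print(select_chunks(candidates))
```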

5. Multi-Chunk Synthesis

The final answer is stitched together from these high-quality chunks, following a theme-aware composition model (from the Thematic search patent):

- Structured into sections (e.g., pros/cons, comparison, how-to)

- Formatted using standard layouts (lists, cards, expandable sections)

- Personalized using session context

Summary: What Makes This Different from Traditional Search?

- AI Mode is session-aware, using historical context and device data.

- It features query fan-out, enabling deeper and more accurate response construction.

- It uses LLM-based reasoning to synthesize answers, not merely retrieve them.

- It accesses a proprietary content store, not the live web.

- It supports multi-turn conversations, adapting responses over time.

The “Search with stateful chat” patent reflects how Google is re-engineering search to work in real time, shifting from indexing pages to composing answers.

Google's Dual Semantic System: Gecko and Jetstream

Google's AI Mode doesn't rely on a single semantic model. Evidence from Google Cloud Discovery Engine reveals two distinct systems working in parallel:

Gecko: Embedding Similarity

Gecko is Google's embedding model that measures vector similarity between queries and content. It converts both your content and the user's query into mathematical representations (embeddings) and calculates how closely they align in semantic space.

Think of Gecko as answering: "How similar is this content to what the user asked?"

Gecko excels at:

- Matching topically related content to queries

- Understanding synonyms and related concepts

- Capturing general semantic alignment

Jetstream: Cross-Attention with Negation Handling

Jetstream is a cross-attention model that processes the query and document together rather than comparing precomputed vectors. Google's documentation notes it "better understands context and negation compared to embeddings."

Think of Jetstream as answering: "Does this content actually address what the user needs, including what they want to avoid?"

Jetstream excels at:

- Understanding context dependent meaning

- Processing negation ("not," "without," "excluding")

- Handling complex queries where intent shifts based on modifiers

Why Negation Handling Matters

Traditional embedding models struggle with negation. The vectors for "best CRM for startups" and "best CRM not for startups" are nearly identical because the two queries share almost every word. Jetstream's cross-attention architecture processes the relationship between query terms, recognizing that "not" fundamentally changes the intent.
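A quick way to see the problem is to compare the two queries with cosine similarity over simple word counts. This bag-of-words vector is a stand-in chosen for transparency, not Gecko: real embeddings are learned and dense, though the point above is that they can be similarly insensitive to a single negating word.

```python
import math
from collections import Counter


def cosine(a: str, b: str) -> float:
    """Cosine similarity between the word-count vectors of two strings."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    return dot / (norm_a * norm_b)


score = cosine("best CRM for startups", "best CRM not for startups")
print(f"{score:.3f}")  # 4 / (2 * sqrt(5)) ≈ 0.894
```

A score near 0.9 treats two opposite intents as near-duplicates, which is why a model that reads the query and document together is better placed to notice the "not."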

This has direct implications for how you structure content.

Optimization Implications

- Explicitly state what your product or solution is NOT. If you're writing about project management software, include statements like "Unlike spreadsheet-based tracking, [Product] provides..." or "This approach works for teams that need X, not organizations requiring Y."

- Address exclusionary queries directly. Users often search with negation: "CRM without per-seat pricing" or "project management tool not Asana." Content that explicitly addresses these exclusions has an advantage with Jetstream.

- Create comparison content with clear distinctions. Rather than vague differentiation, use explicit contrast: "[Product] handles X. It does not handle Y. For Y, consider [Alternative]."

- Structure FAQs around what you don't do. Include questions like "What [Product] doesn't include" or "Who shouldn't use [Product]" to capture negation queries.

Example Implementation

Instead of: "Our CRM is designed for growing businesses with flexible pricing."

Write: "Our CRM is designed for growing businesses, not enterprise organizations with complex compliance requirements. Unlike per-seat pricing models, our approach doesn't penalize you for adding team members."

The second version gives Jetstream explicit signals about what the product is and isn't, who it serves and doesn't serve.

Modern SEO Ranking Factors in the Era of AI Mode

1. Semantic Relevance and Intent Mapping

Google ranks content based on how well it aligns with both the explicit query and the inferred task or intent behind it.

- Latent Intent Coverage: Google favors content that satisfies sub-intents derived from the original query via semantic decomposition (e.g., comparisons, use-case specificity).

- Vector Similarity: Gemini models evaluate how closely content semantically matches internal synthetic queries, prioritizing definition-rich, high-fidelity passages.

- Modular Content Blocks: Google selects individual content chunks (not full pages) that match user needs, often in 150–300 word standalone sections.

2. Content Structure and Layout

Content is ranked higher when it follows predictable, chunk-based structures optimized for generative rendering.

- Hierarchical Formatting: Clear headings, use-case subheads, and organized FAQs make content easier to parse and cite.

- Fragmentation for Retrieval: Task-specific segments (e.g., pros/cons, how-tos, comparisons) improve extractability during synthesis.

- Preferred Layouts: Google favors formats that fit LLM-generated summaries, like bulleted lists, numbered steps, and concise answers.

3. Brand Visibility and Web Signals

Google’s models incorporate brand strength and presence as part of trust and authority evaluation.

- Branded Search Behavior: Volume and diversity of branded queries signal authority and user familiarity.

- Citation Frequency & Location: Mentions in authoritative, semantically aligned content increase retrieval and citation likelihood.

- Sentiment Analysis: Positive surrounding context about a brand contributes to trust in AI-generated answers.

- UGC & Social Proof: Signals from forums, Reddit, and social platforms support reputation scoring and content recall.

4. Source Credibility and Trustworthiness

Content credibility is assessed through multiple E-E-A-T proxies.

- Author Attribution & Experience: Verified authorship, expert credentials, and real-world experience support trust scores.

- Source Referencing: Pages citing high-authority sources (external and internal) are more likely to be trusted and cited.

- Factual Precision: Statements that are declarative, data-driven, and independently verifiable are prioritized for inclusion.

5. Content Freshness and Recency

Recency remains a key relevance signal in Google’s generative systems.

- Update Frequency: Pages with recent edits and refreshed metadata rank higher in time-sensitive queries.

- Last Modified Indicators: Google surfaces content that transparently communicates its currency (e.g., “Updated June 2025”).

6. Topical Authority and Coverage Depth

Google ranks domains that demonstrate comprehensive and consistent coverage of a topic.

- Content Clustering: Sites that build semantically interlinked hubs of related content demonstrate stronger topical authority.

- Original Contributions: Proprietary frameworks, original insights, and unique data are favored over regurgitated summaries.

- Entity Contextualization: Rich use of named entities and schema helps Google associate content with relevant knowledge graph topics.

7. Technical Accessibility and Indexing

Indexability remains foundational for eligibility in AI-generated responses.

- HTML Priority: Google prefers content that renders in the initial DOM; static HTML is prioritized over JS-heavy implementations.

- Performance & UX: Mobile-friendly, fast-loading content contributes to both human usability and AI readability.

- Crawler Access: Pages must be accessible to crawlers (e.g., Googlebot, GPTBot, PerplexityBot) to be discoverable and included.
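Crawler access can be sanity-checked with Python's built-in `urllib.robotparser`. The robots.txt rules and URLs below are an example policy, not a recommendation. Note that `urllib.robotparser` applies the first matching rule in file order, whereas Google documents longest-match precedence, so treat this as a quick check rather than a replica of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser

# Example policy: block GPTBot entirely, keep /admin/ private from everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = [
    ("Googlebot", "https://example.com/pricing"),
    ("Googlebot", "https://example.com/admin/settings"),
    ("GPTBot", "https://example.com/pricing"),
    ("PerplexityBot", "https://example.com/pricing"),
]
for agent, url in checks:
    print(f"{agent:<14} {url:<40} allowed={parser.can_fetch(agent, url)}")
```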

8. Multimodal and Structured Input Compatibility

Google ranks content higher when it supports multi-format interpretation and synthesis.

- Multimodal Support: Content that includes charts, images, videos, and tables improves the richness and usability of responses.

- Cross-Modal Alignment: Text must semantically reinforce the meaning of visuals to improve retrieval and composability.

- Structured Media Metadata: Alt-text, captions, and transcripts enable AI systems to parse and reference non-textual elements effectively.

How to Optimize for AI Mode

The new rule of visibility: If your content isn’t semantically aligned, chunk-structured, and context-aware, it won’t be seen.

Here's a breakdown of key strategies:

1. Think in Chunks, Not Pages

What Google Evaluates:

AI Mode retrieves passages, not full pages. Each passage is assessed on its semantic precision, standalone utility, and retrievability.

Google Cloud Discovery Engine's chunking configuration reveals a maximum chunk size of 500 tokens (approximately 375 words). This isn't a fixed size but a ceiling: chunks can be smaller, but retrieval units won't exceed this limit.

What This Means

When AI Mode retrieves content to synthesize answers, it's pulling chunks capped at roughly 375 words maximum. Any information that spans beyond this limit risks being split across chunks, potentially losing coherence or being retrieved incompletely.
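The 375-word figure follows from a rule-of-thumb ratio of roughly 0.75 English words per token (500 × 0.75 = 375); actual counts depend on the tokenizer. The sketch below uses that assumed ratio to pack paragraphs into chunks that stay under the ceiling, splitting only at paragraph boundaries so no paragraph is cut mid-thought. The helper name and ratio are illustrative.

```python
WORDS_PER_TOKEN = 0.75  # rough English average; real tokenizers vary
MAX_TOKENS = 500  # chunk ceiling cited above
MAX_WORDS = int(MAX_TOKENS * WORDS_PER_TOKEN)  # 375


def pack_paragraphs(paragraphs: list[str], max_words: int = MAX_WORDS) -> list[list[str]]:
    """Group consecutive paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words becomes its own oversized chunk,
    which flags it as a candidate for rewriting or manual splitting.
    """
    chunks: list[list[str]] = []
    current: list[str] = []
    count = 0
    for para in paragraphs:
        words = len(para.split())
        if current and count + words > max_words:
            chunks.append(current)
            current, count = [], 0
        current.append(para)
        count += words
    if current:
        chunks.append(current)
    return chunks


paras = ["word " * 200, "word " * 150, "word " * 100, "word " * 400]
for chunk in pack_paragraphs(paras):
    print(sum(len(p.split()) for p in chunk))
```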

SaaS Action Plan:

- Engineer content at the passage level, both for semantic similarity and for LLM preference.

- Break content into blocks of up to roughly 375 words, each answering a unique user intent.

- Front-load key information; snippets appear to be limited to 160 characters.

- Use clear H2s/H3s that reflect sub-intents: “Pricing breakdown”, “How [Product] integrates with Slack”, “Pros & cons of [tool]”.

- Add TL;DR summaries or executive intros above the fold.

- Build each content asset like a knowledge card, not a blog post.

Example: Your “Pricing” page should be a modular set of cards by plan, feature, and use case, not just a table with a paragraph.
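To check front-loading, you can preview what the first ~160 characters of each section would show. The 160-character limit is the observed figure above, not a documented constant; the helper below is a hypothetical utility that cuts at a word boundary and appends an ellipsis.

```python
def snippet_preview(text: str, limit: int = 160) -> str:
    """Return text truncated at a word boundary to at most `limit` characters."""
    text = " ".join(text.split())  # collapse whitespace and newlines
    if len(text) <= limit:
        return text
    cut = text[: limit - 1]  # leave one character for the ellipsis
    if text[limit - 1] != " " and " " in cut:
        cut = cut[: cut.rfind(" ")]  # avoid ending mid-word
    return cut.rstrip(" ,;:") + "…"


intro = ("Acme CRM costs $12 per user per month and includes a free tier for up to two users. "
         "This page compares every plan, lists integrations, and explains how billing works.")
preview = snippet_preview(intro)
print(preview)
```

If the preview cuts off before the key fact, move that fact into the first sentence.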

2. Format for Generative Integration

What Google Prefers:

AI Mode uses templated response structures (comparisons, lists, pros/cons, feature matrices, definitions, etc.), favoring functional structure over editorial length.

Google Cloud Discovery Engine's chunking configuration includes an option to "include ancestor headings in chunks." When enabled, each retrieved chunk carries its full heading hierarchy as context.
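To see what heading-aware chunking implies for your pages, the sketch below splits a Markdown-style page at headings and prefixes each chunk with its full heading path, similar in spirit to the "include ancestor headings" option. It is a local approximation, not Discovery Engine's parser; the `#` heading syntax and the ` > ` separator are assumptions.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def chunks_with_ancestors(markdown: str) -> list[str]:
    """Split at headings; prefix each chunk's text with its heading path."""
    path: list[tuple[int, str]] = []  # stack of (level, title) ancestors
    chunks: list[str] = []
    body: list[str] = []

    def flush() -> None:
        text = " ".join(body).strip()
        if text:
            chunks.append(" > ".join(title for _, title in path) + "\n" + text)
        body.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            # Pop headings at the same or deeper level before pushing this one.
            while path and path[-1][0] >= level:
                path.pop()
            path.append((level, match.group(2).strip()))
        elif line.strip():
            body.append(line.strip())
    flush()
    return chunks


page = """\
# Acme CRM
## Pricing
### Starter plan
$12 per user per month.
### Team plan
$25 per user per month.
## Integrations
Slack, Gmail, HubSpot.
"""
for chunk in chunks_with_ancestors(page):
    print(chunk, end="\n\n")
```

A chunk like "$25 per user per month." is ambiguous on its own; with its heading path attached, it is clearly the Team plan price.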

SaaS Action Plan:

- Use preformatted blocks:

- Tables → for feature comparisons, integrations, or plan tiers

- Lists → for benefits, use cases, setup steps

- FAQs → for direct, semantically focused answers

- Treat headings as metadata, not just visual breaks. Your H2s and H3s aren't just for readers scanning the page. They're labels that travel with your content during AI retrieval.

- Add schema markup: FAQPage, HowTo, Product, WebPage, Article.

- Implement content freshness signals: add visible “Last updated” metadata.

Example: Don’t bury “CRM integrations” in a paragraph. Use a bulleted list titled “Works seamlessly with…”
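Schema markup is usually embedded as JSON-LD. The sketch below generates `FAQPage` and `Article` objects using schema.org types and properties (`mainEntity`, `acceptedAnswer`, `datePublished`, `dateModified`); "Acme CRM", the question text, and the dates are placeholders.

```python
import json
from datetime import date


def faq_page(qa_pairs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


def article(headline: str, published: date, modified: date) -> dict:
    """Build a schema.org Article object carrying freshness dates."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }


def script_tag(data: dict) -> str:
    """Wrap a JSON-LD object in the script tag placed in the page HTML."""
    return f'<script type="application/ld+json">\n{json.dumps(data, indent=2)}\n</script>'


faq = faq_page([("Does Acme CRM integrate with Slack?",
                 "Yes. Acme CRM posts deal updates to Slack channels.")])
print(script_tag(faq))
print(script_tag(article("Acme CRM pricing explained", date(2025, 1, 15), date(2025, 6, 2))))
```

Keep `dateModified` in sync with the visible “Last updated” line so the two freshness signals agree.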

3. Engineer for Query Fan-Out

What Google Does:

AI Mode breaks each query into synthetic sub-questions using LLM inference. Each sub-intent is matched to a chunk.

SaaS Action Plan:

- Understand and anticipate synthetic query landscapes.

- Structure content for multi-intent resolution:

- “What is [tool]”

- “How to use [tool]”

- “Best [tool] for [persona]”

- “Alternatives to [tool]”

- Build pages that cover full decision journeys with internal jump links.

- Use semantic anchors and HTML headings to define each intent block.

Example: Your product page should include “What is it?”, “Who it’s for?”, “Setup steps”, “Alternatives”, and “FAQs” all in separate blocks.
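One way to keep intent blocks consistent across product pages is to generate their headings and jump-link anchors from a shared template list. The templates mirror the intents listed above; the slug rules and the "Acme CRM" product name are illustrative.

```python
import html
import re

# Intent templates from the list above; placeholders are filled per page.
INTENT_TEMPLATES = [
    "What is {tool}?",
    "How to use {tool}",
    "Best {tool} for {persona}",
    "Alternatives to {tool}",
    "{tool} FAQs",
]


def slugify(text: str) -> str:
    """Lowercase, replace non-alphanumeric runs with hyphens, trim stray hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def intent_blocks(tool: str, persona: str) -> list[tuple[str, str]]:
    """Return (heading, anchor id) pairs, one per intent block."""
    headings = [t.format(tool=tool, persona=persona) for t in INTENT_TEMPLATES]
    return [(h, slugify(h)) for h in headings]


def jump_links(blocks: list[tuple[str, str]]) -> str:
    """Render an HTML list of in-page links to each intent block."""
    items = "\n".join(
        f'  <li><a href="#{anchor}">{html.escape(heading)}</a></li>'
        for heading, anchor in blocks
    )
    return f"<ul>\n{items}\n</ul>"


blocks = intent_blocks("Acme CRM", "startups")
print(jump_links(blocks))
for heading, anchor in blocks:
    print(f'<h2 id="{anchor}">{html.escape(heading)}</h2>')
```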

4. Optimize for Semantic Salience, Not Keywords

What Google Analyzes:

AI Mode scores content by salience, specificity, and semantic proximity not exact match terms.

SaaS Action Plan:

- Use clear, high-precision definitions of your product, features, and outcomes.

- Optimize for semantic similarity and triple clarity (subject–predicate–object).

- Align writing with task-based phrasing: “How to set up”, “Why it matters”, “What’s included”.

- Use latent query formats that AI might fan out into:

- “What’s the ROI of [tool] for remote teams?”

- “Is [tool] secure for enterprise?”

Example: Say “We support SAML SSO for secure enterprise onboarding,” not “our tool is secure and easy to use.”
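Subject–predicate–object clarity can be enforced by drafting product claims as triples first and rendering each as one declarative sentence. The `Triple` type and the claims below are illustrative, not a required schema.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


def render(triple: Triple) -> str:
    """Turn a triple into one declarative sentence."""
    return f"{triple.subject} {triple.predicate} {triple.obj}."


claims = [
    Triple("Acme CRM", "supports", "SAML SSO for enterprise onboarding"),
    Triple("Acme CRM", "integrates with", "Slack, Gmail, and HubSpot"),
    Triple("The Starter plan", "costs", "$12 per user per month"),
]
for claim in claims:
    print(render(claim))
```

If a claim cannot be expressed as a triple ("our tool is easy"), it is probably too vague to be extracted.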

5. Prioritize E-E-A-T Signals to Influence Inclusion and Trust

What Google Prioritizes:

E-E-A-T influences the likelihood that your content is retrieved, trusted, and cited during synthesis. Google’s AI systems weigh the overall credibility of your site, content format, and authorship when deciding which sources to draw from.

SaaS Action Plan:

- Establish Author Expertise & Experience:

- Include expert bylines, bios, and LinkedIn links for blog and help content.

- Highlight your leadership team, certifications, and brand milestones on the About page.

- Showcase Experience and Results:

- Reference usage stats (e.g., “used by 12,000+ SaaS teams”) and real-world outcomes.

- Include customer logos, case studies, and quotes from practitioners.

- Reinforce Authority & Trust Through Structure:

- Link to reputable external sources.

- Use structured data: Organization, Product, Person, WebPage, and Review.

- Add clear timestamps, update logs, and references to current-year data.

Example: Instead of saying “We’re trusted,” cite the number of reviews on G2, include analyst quotes, or highlight that you’re ISO certified.

6. Increase Brand Visibility Beyond Your Site

What Google Connects:

Citation patterns, UGC signals, and brand mentions all increase retrieval and inclusion likelihood in AI Overviews and AI Mode.

SaaS Action Plan:

- Appear in other channels and influence user search behavior there.

- Promote brand visibility across:

- Reddit, Quora, Stack Overflow

- YouTube and LinkedIn thought leadership

- Seed use cases and comparisons (“[Brand] vs [Competitor]”) in forums and curated answer communities.

- Earn unlinked mentions and co-citations.

Example: A single Reddit thread titled “Why we switched to [Your SaaS] from [Big name]” can become the default context for your brand in generative search.

AI Mode Optimization Checklist

Use this to evaluate whether your content is AI Mode ready for both visibility and citability in Google’s generative search experience:

Think in blocks, not blogs. Your goal is to be included, not just indexed. If each section isn’t composable, scannable, and semantically tight, it’s less likely to make it into AI Mode answers.

AI Mode FAQs

1. What is Google AI Mode?

Google AI Mode is an AI-powered search experience that provides conversational, multimodal answers instead of a traditional list of blue links. It uses Google’s Gemini LLMs to synthesize personalized responses from structured and unstructured data sources.

2. How does AI Mode differ from normal Google Search?

Unlike traditional search that ranks documents, AI Mode composes answers. It breaks down your query into sub-questions, retrieves relevant information, and generates a natural language response using advanced AI models.

3. What is “query fan-out” in AI Mode?

Query fan-out is the process where a single user query is split into multiple sub-queries. Each sub-query targets a different facet of user intent and is processed independently to construct a more complete, synthesized answer.

4. Is AI Mode available to everyone?

As of mid-2025, AI Mode is available to all users in the U.S., with rollout underway in other regions like the UK and India. It’s currently accessible via the “AI Mode” tab in Google Search.

5. Can AI Mode replace traditional search entirely?

Not yet. AI Mode is still considered experimental and complements traditional search. Google has confirmed that web results will continue to be a core part of the experience to support transparency, exploration, and content discovery.

6. How does AI Mode choose what content to show?

AI Mode selects content based on semantic relevance, factuality, format, and source trustworthiness. It prefers well-structured, declarative, and high-authority content, often at the chunk or passage level, not entire pages.

7. Will AI Mode reduce website traffic?

Yes, in many cases. Because answers are surfaced directly in the interface, users may not need to click through to websites. This leads to zero-click searches and lower traditional CTR, especially for informational queries.

8. How can I optimize for visibility in AI Mode?

To increase visibility in AI Mode:

- Structure content in modular chunks (150–300 words)

- Use clear H2s, FAQs, and TL;DR summaries

- Add schema markup (FAQPage, HowTo, Product)

- Write with semantic precision and topical authority

9. Does AI Mode cite sources?

Yes, but only when the system has high confidence in the factual accuracy and value of a source. Citations are selectively shown, often inline, and are more likely to appear for structured, well-attributed content.

10. Is AI Mode the same as Search Generative Experience (SGE)?

SGE was the experimental precursor to AI Mode. In 2024, Google rebranded and rebuilt it as “AI Overviews” and later introduced AI Mode as the full-screen, chat-style version with deeper personalization and multimodal inputs.