How Claude Works & Why It Matters for B2B SaaS

For the first time, we have a window into how Claude decides when to search, when not to, and how it evaluates trust. This has huge implications for SaaS companies fighting for AI visibility.

Claude’s leaked system prompts reveal that it doesn’t treat every query the same. Instead, it classifies questions into distinct search categories ranging from those it answers directly without ever looking things up, to those that trigger single searches, and finally to complex multi-source “research” workflows.

Understanding these classifications is critical, because they dictate which types of content LLMs will actually retrieve and cite, and which they’ll ignore. Below is a breakdown of the categories and what they mean for SaaS visibility.

<never_search_category>

“IF info about the query is stable (rarely changes and Claude knows the answer well) → never search”

In other words, for timeless or stable facts (“What is the capital of France?”), Claude always answers directly, without any search.

Why this matters for SaaS:

Glossary pages and generic definitions won’t win visibility, because Claude already “knows” them.

<do_not_search_but_offer_category>

“For queries in the Do Not Search But Offer category, ALWAYS (1) first provide the best answer using existing knowledge, then (2) offer to search for more current information, WITHOUT using any tools in the immediate response.”

Examples include statistical data, percentages, rankings, lists, trends, or metrics that update annually or more slowly, as well as people, topics, or entities Claude already knows about but that may have changed since its knowledge cutoff.

Why this matters for SaaS:

Freshness signals (timestamps, benchmarks, “2025” titles) can influence whether your content gets pulled in when a user accepts Claude’s offer to fetch updates.

<single_search_category>

“Simple factual query or can answer with one source → single search”

“If queries are in this Single Search category, use web_search or another relevant tool ONE time immediately. Often are simple factual queries needing current information that can be answered with a single authoritative source, whether using external or internal tools.”

Example queries: current conditions, forecasts, or info on rapidly changing topics (e.g., “what's the weather?”).

Why this matters for SaaS:

This is winner-takes-all: only the single most authoritative source on a fast-moving topic (feature releases, product news) gets surfaced.

<research_category>

“Queries in the Research category need 2-20 tool calls, using multiple sources for comparison, validation, or synthesis.”

“Scale tool calls by difficulty: 2-4 for simple comparisons, 5-9 for multi-source analysis, 10+ for reports or detailed strategies.”

“Complex multi-aspect query or needs multiple sources → research, using 2-20 tool calls depending on query complexity ELSE → answer the query directly first, but then offer to search.”
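The quoted rules read like a small decision chain, which can be sketched in Python. This is only an illustration under stated assumptions: the `QueryTraits` fields are hypothetical hand-assigned labels (the prompt does not define them), the order in which the rules are checked is inferred from the excerpts above, and the upper bound of 20 for the largest tier is taken from the "2-20 tool calls" wording.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    NEVER_SEARCH = "never_search"
    DO_NOT_SEARCH_BUT_OFFER = "do_not_search_but_offer"
    SINGLE_SEARCH = "single_search"
    RESEARCH = "research"


@dataclass
class QueryTraits:
    # Hypothetical labels for a query; not fields defined by the prompt.
    stable: bool                  # rarely changes and is already well known
    fast_changing: bool           # needs current information
    needs_multiple_sources: bool  # complex or multi-aspect


def classify(traits: QueryTraits) -> Category:
    """Apply the quoted rules in an assumed order."""
    if traits.stable:
        return Category.NEVER_SEARCH
    if traits.needs_multiple_sources:
        return Category.RESEARCH
    if traits.fast_changing:
        return Category.SINGLE_SEARCH
    # ELSE branch: answer directly first, then offer to search.
    return Category.DO_NOT_SEARCH_BUT_OFFER


# Tool-call ranges quoted in the prompt, as (minimum, maximum).
TOOL_CALL_RANGES = {
    "simple_comparison": (2, 4),
    "multi_source_analysis": (5, 9),
    "report_or_strategy": (10, 20),
}

weather = QueryTraits(stable=False, fast_changing=True, needs_multiple_sources=False)
print(classify(weather).value)  # single_search
```

Seen this way, a page only has a chance of being retrieved when the query lands in one of the last three branches.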

Research query examples (from simpler to more complex):

- reviews for [recent product]? (iPhone 15 reviews?)

- prediction on [current event/decision]? (Fed's next interest rate move?) (use around 5 web_search + 1 web_fetch)

- How does [our performance metric] compare to [industry benchmarks]? (Q4 revenue vs industry trends?)

- research [complex topic] (market entry plan for Southeast Asia?) (use 10+ tool calls: multiple web_search and web_fetch plus internal tools)

Why this matters for SaaS:

Deep, original assets like benchmarks, ROI calculators, and detailed comparisons thrive here. These are the queries where SaaS brands can actually own visibility.

<web_search_usage_guidelines>

How to search:

- Keep queries concise - 1-6 words for best results. Start broad with very short queries, then add words to narrow results if needed. For user questions about thyme, first query should be one word ("thyme"), then narrow as needed

- Never repeat similar search queries - make every query unique

- If initial results insufficient, reformulate queries to obtain new and better results
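To make the query rules above concrete, here is a minimal Python sketch that checks a planned sequence of queries against them. It is an illustration, not how Claude works internally: the function name `check_queries` is hypothetical, and treating two queries as "similar" when they contain the same set of words is a crude assumption made for this example.

```python
def check_queries(queries: list[str], max_words: int = 6) -> list[str]:
    """Return a list of problems found in a sequence of search queries."""
    problems = []
    seen = set()
    for query in queries:
        words = query.lower().split()
        # Rule: keep queries concise, 1-6 words.
        if not 1 <= len(words) <= max_words:
            problems.append(f"{query!r}: {len(words)} words, expected 1-{max_words}")
        # Rule: never repeat similar queries (assumed proxy: same word set).
        key = frozenset(words)
        if key in seen:
            problems.append(f"{query!r}: repeats an earlier query")
        seen.add(key)
    return problems


plan = ["thyme", "thyme growing conditions", "growing conditions thyme"]
print(check_queries(plan))
# ["'growing conditions thyme': repeats an earlier query"]
```

The practical takeaway for content teams is that retrieval starts from very short, broad queries, so pages need to be findable on one- or two-word topics before any narrowing happens.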

Response guidelines:

- Keep responses succinct - include only relevant requested info

- Only cite sources that impact answers. Note conflicting sources

- Lead with recent info; prioritize 1-3 month old sources for evolving topics

- Favor original sources (e.g. company blogs, peer-reviewed papers, gov sites, SEC) over aggregators. Find highest-quality original sources. Skip low-quality sources like forums unless specifically relevant

- Be as politically neutral as possible when referencing web content

- Never reproduce copyrighted content. Use only very short quotes from search results (<15 words), always in quotation marks with citations

<mandatory_copyright_requirements>

“Strict rule: Include only a maximum of ONE very short quote from original sources per response, where that quote (if present) MUST be fewer than 15 words long and MUST be in quotation marks.”
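The quote limit is mechanical enough to express as a check. The sketch below is an assumption-laden illustration: `quote_violations` is a hypothetical helper, and it treats every passage in double quotation marks (straight or curly) as a quotation from a source, which real text will not always satisfy.

```python
import re

# Matches text inside straight or curly double quotation marks.
QUOTE_RE = re.compile(r'"([^"]+)"|“([^”]+)”')


def quote_violations(response: str, max_quotes: int = 1, max_words: int = 15) -> list[str]:
    """Flag responses with more than one quote or a quote of 15+ words."""
    quotes = [straight or curly for straight, curly in QUOTE_RE.findall(response)]
    problems = []
    if len(quotes) > max_quotes:
        problems.append(f"{len(quotes)} quotes, at most {max_quotes} allowed")
    for quote in quotes:
        word_count = len(quote.split())
        if word_count >= max_words:
            problems.append(f"quote has {word_count} words, must be fewer than {max_words}")
    return problems


print(quote_violations('The report says "churn fell sharply" last year.'))  # []
```

For publishers, the implication is that a page's most citable claim needs to fit in a short, self-contained phrase.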

<citation_instructions>

If the assistant's response is based on content returned by the web_search tool, the assistant must always appropriately cite its response. Here are the rules for good citations:

- EVERY specific claim in the answer that follows from the search results should be wrapped in tags around the claim

- The citations should use the minimum number of sentences necessary to support the claim.

- “Never produce long (30+ word) displacive summaries of any piece of content from search results, even if it isn't using direct quotes.”

Claude’s Behavior: Key Takeaways for LLM Visibility

GEO (Generative Engine Optimization) Implications for SaaS

Claude’s leaked prompt categories don’t just reveal how it chooses to search; they define what kinds of content get surfaced, ignored, or prioritized. For SaaS founders, this has direct consequences for how to structure a content strategy that wins visibility inside LLMs.

1. Content Excluded in <never_search_category>

- What it means: Claude never queries external sources when the information is considered stable and universally known (e.g., “What is the capital of France?”).

- Implication: Evergreen encyclopedic content is effectively commoditized. Pages that just answer timeless questions (e.g., “What is SaaS?” or “What does CRM stand for?”) won’t get pulled into Claude’s reasoning because the model already “knows” them.

- For GEO strategy: Don’t waste resources building out thin evergreen definitional content. It won’t drive citations. Instead, tie those definitions into contextual, brand-linked content (e.g., “What is SaaS pricing? [with 2025 benchmarks and examples]”) so that your material escapes the “never search” exclusion zone.

2. Content Prioritized in <do_not_search_but_offer_category>

- What it means: Claude answers from internal knowledge but offers to fetch fresher info for queries like stats, rankings, or trend data.

- Implication: This is a gray zone. Your brand’s visibility depends on whether users accept Claude’s “offer to search” and whether your content surfaces when it finally does.

- For GEO strategy: Make your data freshness visible: include clear timestamps, dateModified schema, and “2025” in titles. This may increase the chance that your page surfaces when a user accepts Claude’s offer to fetch more current info.
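For readers unfamiliar with dateModified schema, the following Python sketch generates schema.org Article markup as JSON-LD. The headline and dates are made-up placeholders and `article_jsonld` is a hypothetical helper. Nothing in the quoted prompt says Claude reads structured data, so treat this as an example of the markup itself rather than a guaranteed visibility lever.

```python
import json
from datetime import date


def article_jsonld(headline: str, published: date, modified: date) -> str:
    """Build schema.org Article JSON-LD with explicit freshness dates."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = article_jsonld(
    "SaaS pricing benchmarks",
    published=date(2025, 1, 10),
    modified=date(2025, 6, 2),
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The printed script tag goes in the page's HTML, and the dateModified value should be updated only when the content itself actually changes.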

3. Content That Wins in <single_search_category>

- What it means: For rapidly changing facts (weather, stock price, product launch), Claude runs a single search and relies on one authoritative source.

- Implication: Visibility hinges on being the most authoritative single source for that fact. This is winner-takes-all territory.

- For GEO strategy: Secure topical authority on fast-moving product news, feature releases, or industry updates. Use press releases, original blogs, and integration pages that establish your site as the canonical source.

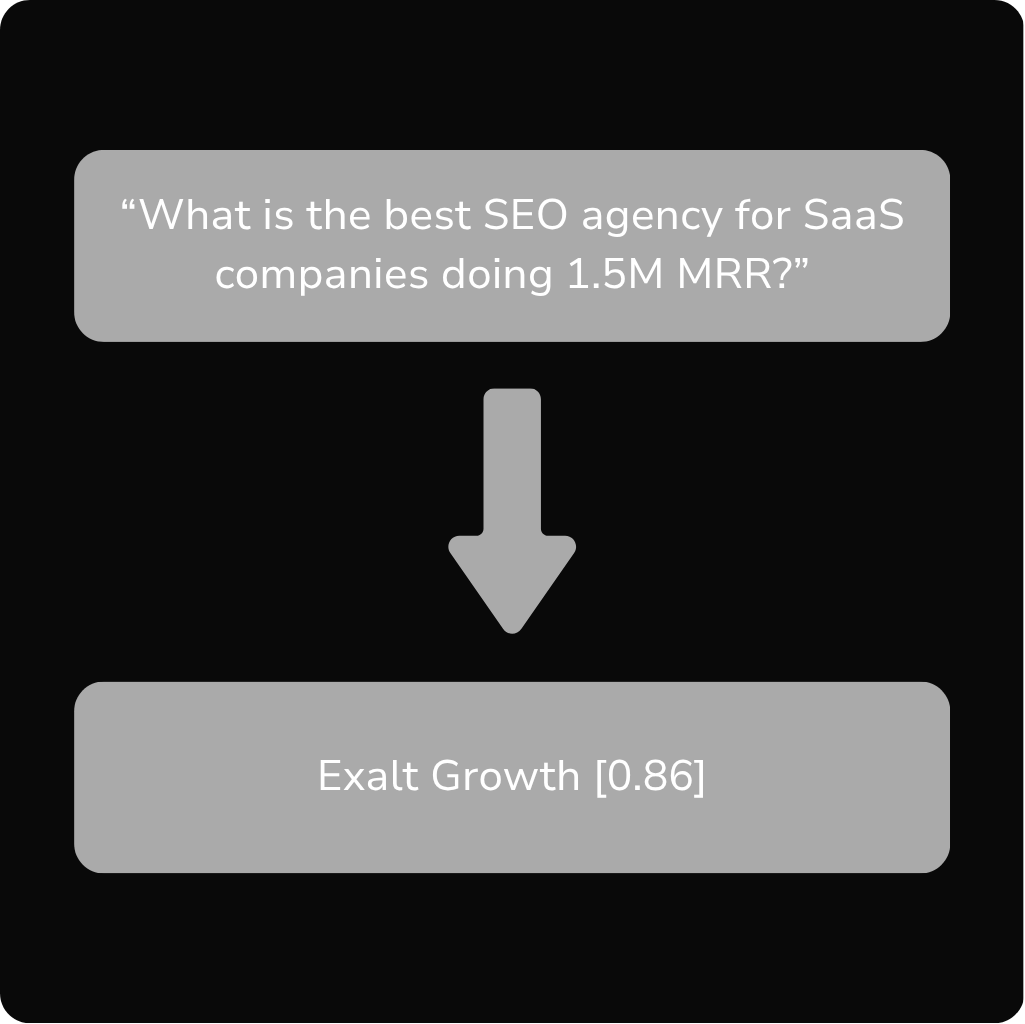

4. Content That Thrives in <research_category>

- What it means: For multi-dimensional, complex queries, Claude makes 2–20 tool calls and synthesizes across sources.

- Implication: This is the highest-value opportunity for SaaS visibility. Research-level queries (e.g., “What’s the best HR software for global compliance?” or “Compare PLG vs sales-led growth in SaaS”) pull in multiple sources, validate across them, and reward depth and originality.

- For GEO strategy: Create original reports, benchmarks, and sidecar tools:

  - Industry benchmarks (salary reports, churn data, ARR trends).

  - Comparisons and “vs” pages (your product vs alternatives).

  - Market entry guides, detailed frameworks, ROI calculators.

These are the assets most likely to get cited in multi-source synthesis, giving SaaS brands durable LLM visibility.

The Bottom Line for SaaS Founders

- Stop chasing commoditized evergreen content. It won’t move the needle.

- Double down on freshness and originality. Claude (and other LLMs) are wired to reward content that updates regularly, answers current queries, and provides unique data or perspective.

- Own research-heavy queries. In the GEO era, authority means being one of the 5–10 sources Claude pulls into its synthesis, and the best way to achieve that is by publishing content no one else has.

Related Readings:

- Generative engine optimization: the evolution of SEO and AI

- Generative engine optimization services

- How to rank on ChatGPT guide

- Semantic SEO AI strategies

- Semantic SEO services

- The 9 best GEO tools

- AI Overviews explained

FAQs

What is the Claude internal prompt leak?

The leak revealed Anthropic’s internal system prompts for Claude, showing exactly how the model decides when to search, when not to, and how it manages citations, copyright, and trust.

Why is the Claude leak important for SaaS companies?

It provides rare transparency into Claude’s search logic, which directly impacts whether your content will be cited inside AI-generated answers. For SaaS founders, this means adapting content strategy to match Claude’s retrieval categories.

What types of content does Claude ignore?

Claude ignores stable, evergreen facts (e.g., definitions or capital cities) that fall into its <never_search_category>. Glossary pages and basic “What is SaaS?” articles are unlikely to drive visibility.

How can SaaS brands win visibility in Claude’s research category?

By producing original, high-authority content like benchmarks, industry reports, comparisons, and sidecar tools. These assets are most likely to be included when Claude performs multi-source research queries.

How does Claude handle citations compared to ChatGPT or Google AI Overviews?

Claude is stricter: it allows only one short quote (<15 words) per response and emphasizes citing original, high-quality sources. This means brands must focus on credibility and authority to be selected.

What’s the most important GEO takeaway from the Claude leak?

Stop chasing commoditized evergreen content. Instead, double down on freshness, originality, and research-heavy assets that Claude (and other LLMs) are more likely to pull into multi-source answers.